NVIDIA-Powered Research Agent

Overview

The NVIDIA-Powered Research Agent is designed as an enterprise-grade research assistant using NVIDIA’s AIQ Blueprint.

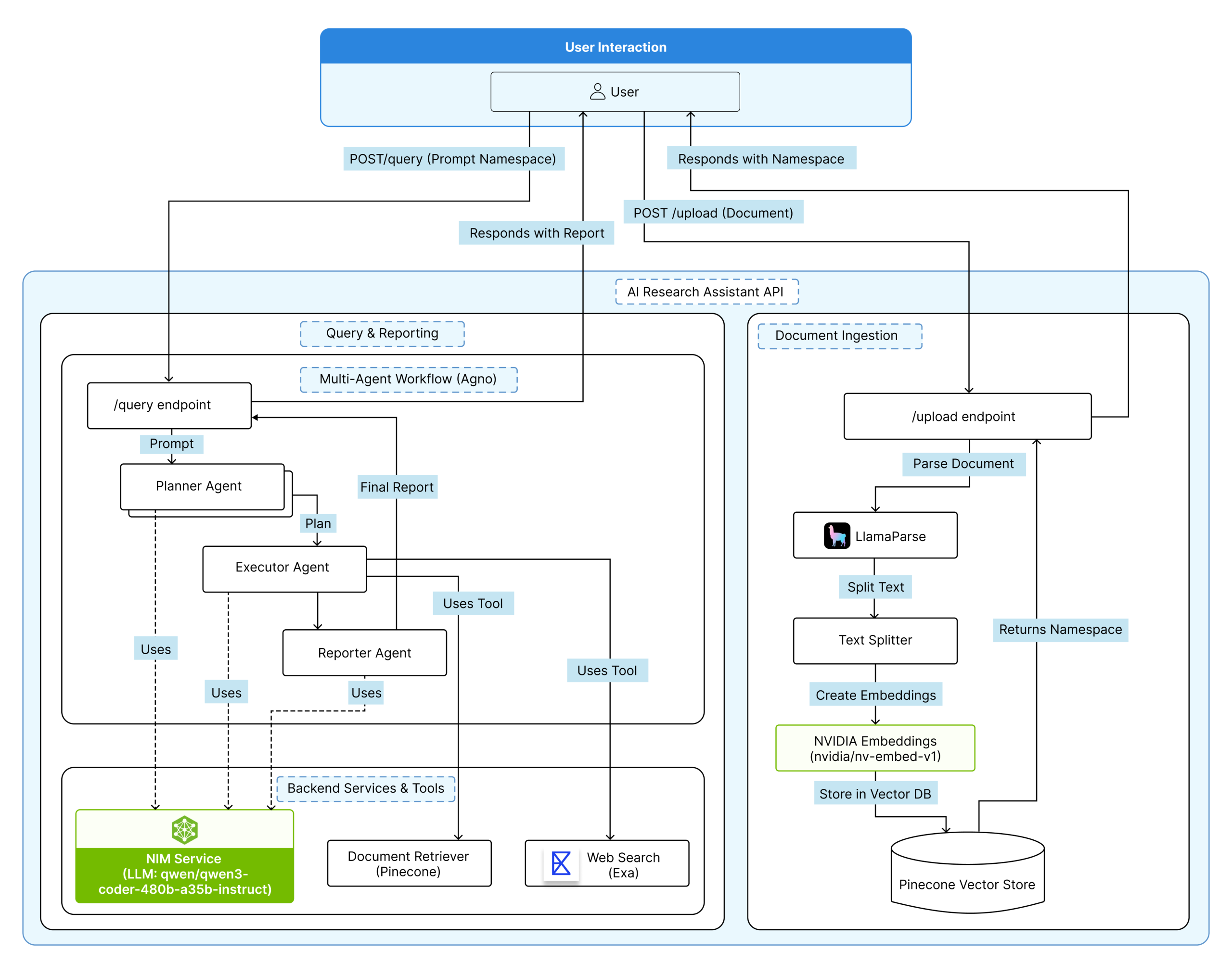

At its core, the system is a Retrieval-Augmented Generation (RAG) pipeline orchestrated by a collaborative multi-agent framework and powered by NVIDIA NIM inference microservices.

The architecture ingests unstructured documents (PDFs, DOCX) via a React UI, using LlamaParse and NVIDIA Embeddings to build a queryable knowledge base in Pinecone. When a user asks a question, the multi-agent system initiates a hybrid search, retrieving deep document context from Pinecone and external web intelligence from Exa.

The LLM then reasons over this combined context to generate a comprehensive, consolidated report. This proof of concept (POC) demonstrates a modular, secure platform that goes beyond simple Q&A: it understands context, connects disparate data sources, and delivers clear answers at enterprise speed.

Challenges in automating research workflows

Enterprises face several challenges in automating research workflows, including handling complex, unstructured documents, ensuring semantic understanding during search, coordinating multi-agent reasoning without adding latency, and maintaining strict data privacy. Balancing inference performance with scalability, incorporating real-time external data, and making AI-driven decisions transparent add further complexity.

Our Solution – NVIDIA-Powered Research Agent

We built the NVIDIA-Powered Research Agent, an intelligent assistant that automates enterprise research by understanding documents, finding relevant information, and generating concise reports. It simplifies complex data analysis, keeps data private, and delivers accurate insights in seconds. Designed for scalability and transparency, it helps teams save time, reduce manual effort, and make faster, data-driven decisions.

Ecosystem and Architecture

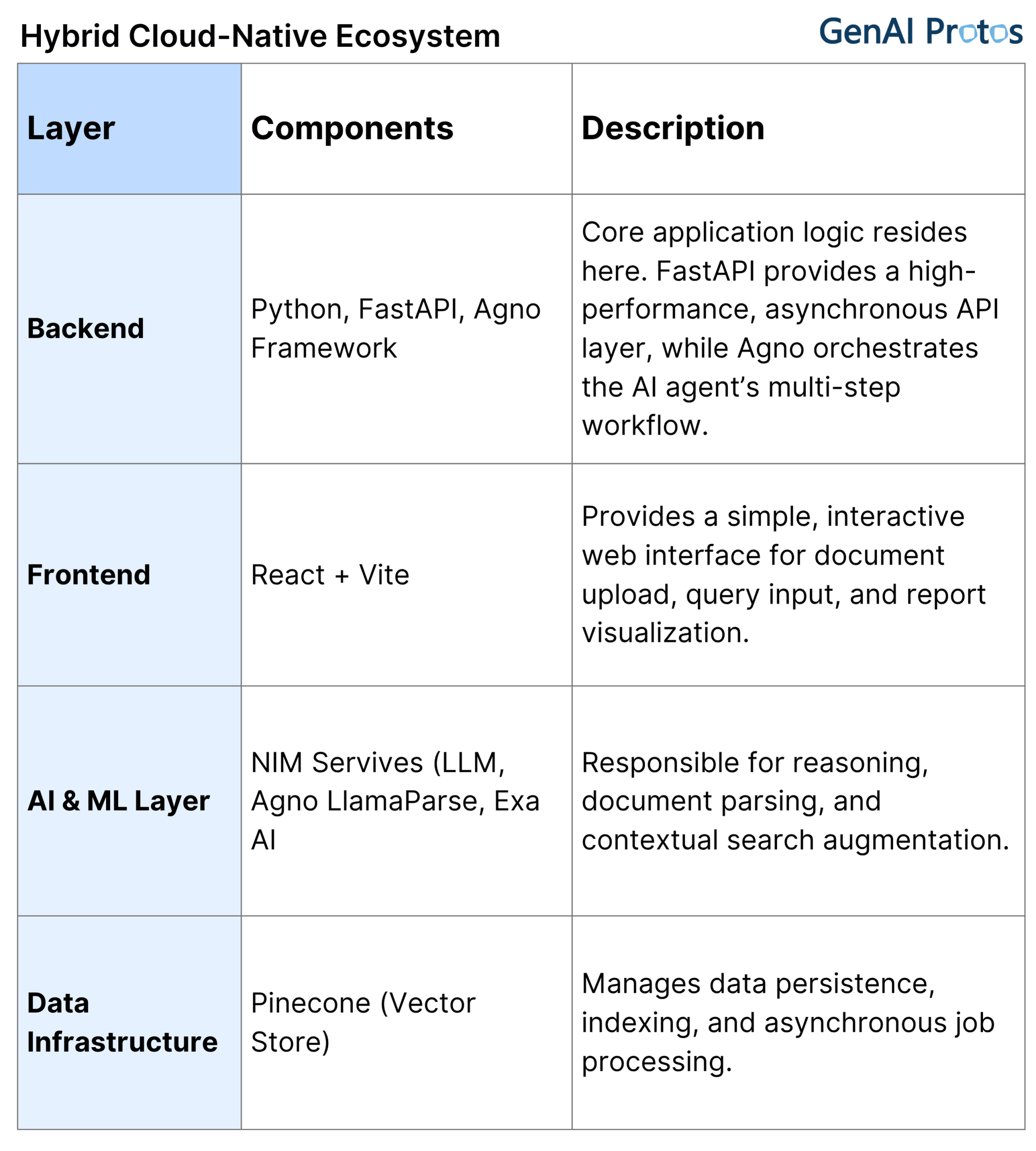

The project is built on a hybrid AI ecosystem that combines the flexibility of custom code with the scalability of managed cloud services.

System Architecture

The architecture is optimized for performance, extensibility, and modular design. It consists of three primary layers:

1. Frontend Layer (User Interaction)

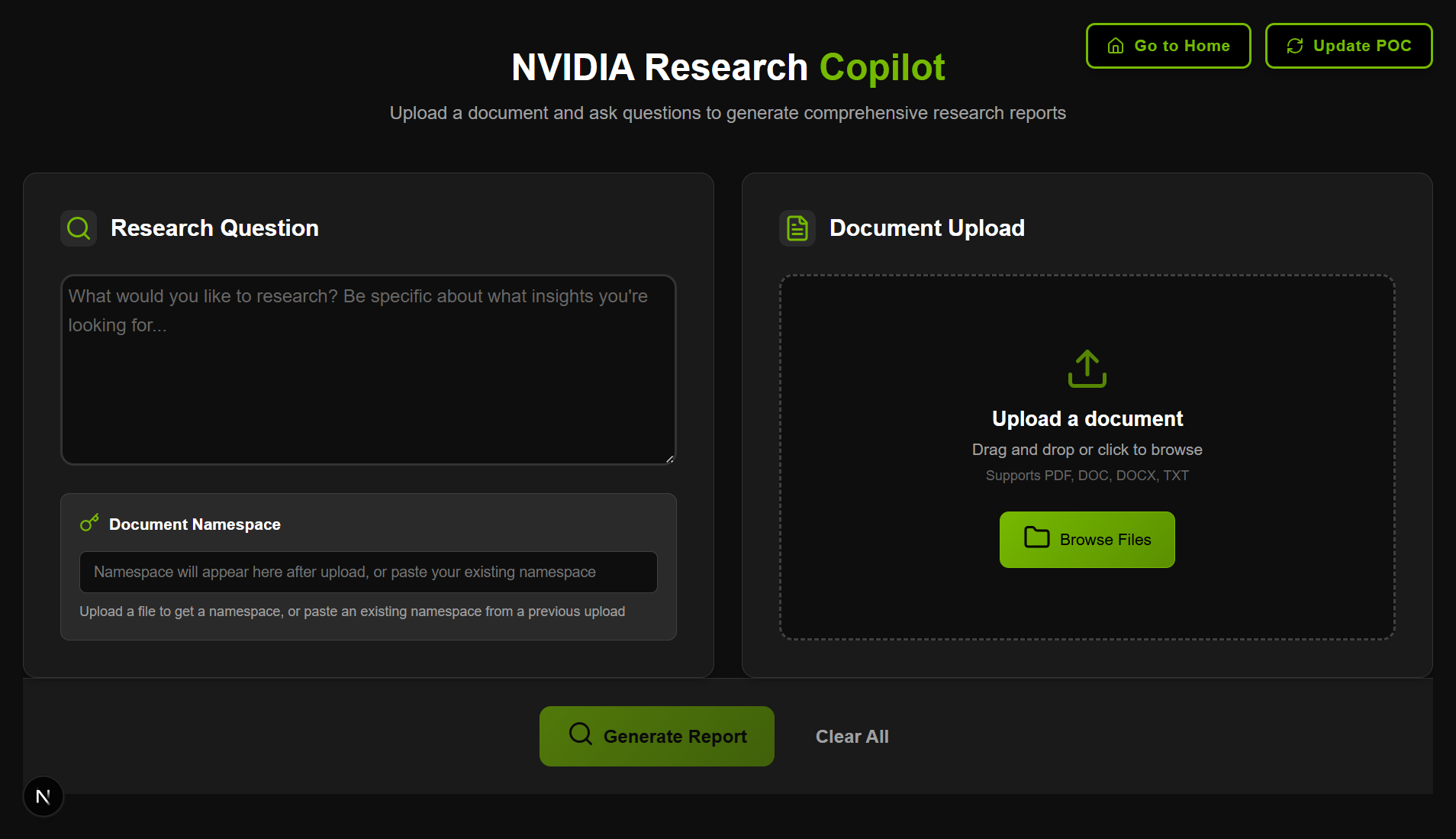

The interface is developed using React and Vite, ensuring a fast, responsive, and modern user experience. It enables users to:

- Upload multi-format documents (PDF, DOCX, TXT, etc.)

- Submit natural-language research queries

- View AI-generated structured reports in real time

Communication between frontend and backend occurs via secure RESTful APIs built on FastAPI.

2. Backend Layer (Processing & Orchestration)

The backend, developed using FastAPI, serves as the central control system that manages file ingestion, AI agent orchestration, and data storage. It features:

- Asynchronous I/O for optimized request handling

- CORS middleware to control which frontend origins may call the API

- Error handling and logging for reliability and observability

This layer integrates tightly with the Agno framework, which handles agent orchestration and reasoning control. Each AI agent is configured to perform a specific function within the workflow, ensuring clarity, modularity, and efficiency.
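To illustrate the asynchronous I/O point listed among the backend features, here is a minimal sketch, assuming the backend awaits its two retrieval sources concurrently instead of one after the other. `search_documents` and `search_web` are hypothetical stand-ins for the Pinecone and Exa calls (latency is simulated with `asyncio.sleep`); they are not the project's actual functions. Inside a FastAPI `async def` route the same `asyncio.gather` pattern applies, while cross-origin rules are configured separately (in FastAPI, typically with `CORSMiddleware`).

```python
import asyncio
import time


async def search_documents(query: str) -> list[str]:
    """Hypothetical stand-in for a Pinecone query; the sleep simulates network latency."""
    await asyncio.sleep(0.2)
    return [f"doc chunk relevant to: {query}"]


async def search_web(query: str) -> list[str]:
    """Hypothetical stand-in for an Exa web search; the sleep simulates network latency."""
    await asyncio.sleep(0.2)
    return [f"web result relevant to: {query}"]


async def gather_context(query: str) -> dict[str, list[str]]:
    # Start both searches at once: the total wait is roughly the slower call,
    # not the sum of both calls.
    docs, web = await asyncio.gather(search_documents(query), search_web(query))
    return {"documents": docs, "web": web}


if __name__ == "__main__":
    start = time.perf_counter()
    context = asyncio.run(gather_context("GPU market trends"))
    print(context)
    print(f"elapsed: {time.perf_counter() - start:.2f}s")  # roughly 0.2s, not 0.4s
```

While one request waits on the vector database or the web, the event loop is free to serve other requests, which is the main benefit of async handlers in an I/O-bound service like this one.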

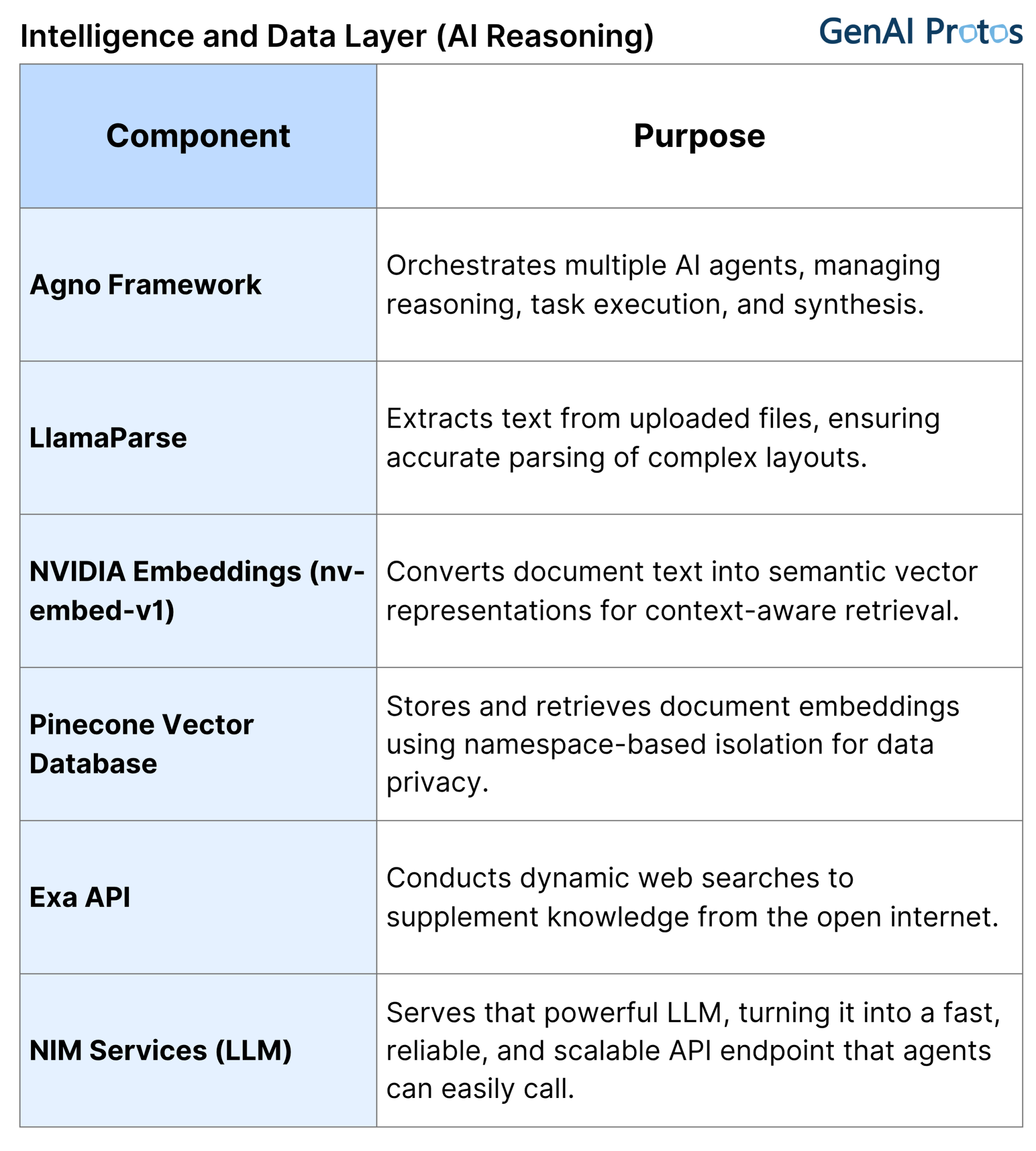

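3. Intelligence and Data Layer (AI Reasoning)

This layer holds the models and data services the agents rely on: LlamaParse for document parsing, NVIDIA's nv-embed-v1 embedding model, the Pinecone vector database, the Exa API for web search, and the qwen/qwen3-coder-480b-a35b-instruct LLM served through NVIDIA NIM.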

Technical Workflow

The system follows a Plan–Execute–Report (PER) model, a structured multi-agent reasoning workflow.

Step 1: File Upload and Text Processing

- User uploads a document via the frontend.

- LlamaParse extracts and converts document data into text.

- The text is split into chunks using RecursiveCharacterTextSplitter, which prefers paragraph, line, and word boundaries and only cuts mid-word as a last resort.

- Each chunk is transformed into a 4096-dimensional vector embedding using NVIDIA’s nv-embed-v1 model.

- These embeddings are stored in Pinecone under a uniquely generated namespace for data isolation.
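Step 1 can be sketched end to end with in-memory stand-ins for the external services. The sketch assumes the following, none of which is the project's code: `split_text` is a hypothetical, pared-down version of the recursive idea behind RecursiveCharacterTextSplitter (same default separator order, but no chunk overlap); `fake_embed` is a placeholder for the nv-embed-v1 call that uses word-level feature hashing, so it is lexical rather than semantic and far smaller than 4096 dimensions; and `NamespacedIndex` mimics only the namespace isolation of a Pinecone index. The record shape (`id`, `values`, `metadata`) mirrors what Pinecone upserts accept, but nothing here calls Pinecone or NVIDIA.

```python
import hashlib
import math
import uuid

SEPARATORS = ("\n\n", "\n", " ", "")  # coarsest to finest, as in the default splitter


def split_text(text: str, chunk_size: int = 200, separators=SEPARATORS) -> list[str]:
    """Recursively split text so that no chunk exceeds chunk_size characters."""
    if len(text) <= chunk_size:
        return [text] if text.strip() else []
    sep, *rest = separators
    if sep == "":
        # Finest level: hard cut by character count.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
    chunks: list[str] = []
    current = ""
    for piece in text.split(sep):
        candidate = f"{current}{sep}{piece}" if current else piece
        if len(candidate) <= chunk_size:
            current = candidate  # keep packing pieces into the current chunk
            continue
        if current.strip():
            chunks.append(current)
        if len(piece) > chunk_size:
            # This piece alone is too big: retry it with the next, finer separator.
            chunks.extend(split_text(piece, chunk_size, rest))
            current = ""
        else:
            current = piece
    if current.strip():
        chunks.append(current)
    return chunks


def fake_embed(text: str, dim: int = 64) -> list[float]:
    """Placeholder for nv-embed-v1: hashed bag of words, L2-normalized (lexical, not semantic)."""
    vec = [0.0] * dim
    for word in text.lower().split():
        bucket = int(hashlib.md5(word.encode()).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class NamespacedIndex:
    """In-memory mimic of a vector index partitioned by namespace."""

    def __init__(self) -> None:
        self._data: dict[str, dict[str, dict]] = {}

    def upsert(self, vectors: list[dict], namespace: str) -> None:
        ns = self._data.setdefault(namespace, {})
        for record in vectors:
            ns[record["id"]] = record

    def query(self, vector: list[float], top_k: int, namespace: str) -> list[tuple[float, dict]]:
        # Only records in the requested namespace are scored; vectors are
        # normalized, so the dot product equals cosine similarity.
        records = self._data.get(namespace, {}).values()
        scored = [(sum(a * b for a, b in zip(vector, r["values"])), r) for r in records]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:top_k]


def ingest(text: str, index: NamespacedIndex, chunk_size: int = 200) -> str:
    """Chunk, embed, and store one document under a fresh namespace; return that namespace."""
    namespace = f"upload-{uuid.uuid4().hex}"
    records = [
        {"id": f"chunk-{i}", "values": fake_embed(chunk), "metadata": {"text": chunk}}
        for i, chunk in enumerate(split_text(text, chunk_size))
    ]
    index.upsert(records, namespace=namespace)
    return namespace
```

Because every query names a namespace, one user's uploaded documents are never scored against another upload's question, which is the isolation property the workflow relies on.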

Step 2: Multi-Agent Research Workflow

The Agno framework coordinates three specialized agents:

- Plan: A Planner agent reads the user's question and devises a step-by-step research plan. It decides whether the answer is likely to be in the document or whether it should also search the web.

- Execute: An Executor agent carries out the plan using specialized tools. It queries the Pinecone index on the document content and calls a web search (via the Exa API) if needed.

- Report: A Reporter agent collects all the gathered information and synthesizes it into a coherent, well-structured answer. The final report directly addresses the question in clear language.
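The Plan–Execute–Report hand-off can be sketched without any framework. This is not Agno's API: `llm` is a hypothetical callable standing in for a model call (prompt in, text out), and `search_index` / `search_web` are hypothetical stubs for the Pinecone and Exa tools. The planner's output is reduced to a list of steps plus a single yes/no on web search, a simplification of the research plan the Planner agent produces.

```python
from dataclasses import dataclass, field
from typing import Callable

LLM = Callable[[str], str]               # hypothetical: prompt in, completion out
Search = Callable[[str], list[str]]      # hypothetical: query in, text snippets out


@dataclass
class Plan:
    steps: list[str]
    use_web: bool


@dataclass
class Findings:
    document_hits: list[str] = field(default_factory=list)
    web_hits: list[str] = field(default_factory=list)


def planner(question: str, llm: LLM) -> Plan:
    # Ask for a plan whose last line states whether web search is needed.
    reply = llm(f"Plan research steps for: {question}\nEnd with 'WEB: yes' or 'WEB: no'.")
    lines = [ln.strip() for ln in reply.splitlines() if ln.strip()]
    use_web = any(ln.lower() == "web: yes" for ln in lines)
    steps = [ln for ln in lines if not ln.lower().startswith("web:")]
    return Plan(steps=steps, use_web=use_web)


def executor(question: str, plan: Plan, search_index: Search, search_web: Search) -> Findings:
    # The document index is always queried; the web only if the plan asks for it.
    # (This sketch uses only plan.use_web; plan.steps is kept for logging/display.)
    findings = Findings(document_hits=search_index(question))
    if plan.use_web:
        findings.web_hits = search_web(question)
    return findings


def reporter(question: str, findings: Findings, llm: LLM) -> str:
    # Label each snippet with its origin so the report can cite its sources.
    context = "\n".join(
        [f"[doc {i}] {hit}" for i, hit in enumerate(findings.document_hits, 1)]
        + [f"[web {i}] {hit}" for i, hit in enumerate(findings.web_hits, 1)]
    )
    return llm(f"Answer '{question}' in Markdown using only:\n{context}")


def run_research(question: str, llm: LLM, search_index: Search, search_web: Search) -> str:
    plan = planner(question, llm)
    findings = executor(question, plan, search_index, search_web)
    return reporter(question, findings, llm)
```

Keeping each agent a small function with typed inputs and outputs makes the hand-off explicit: the Planner never touches tools, the Executor never writes prose, and the Reporter only sees labeled evidence.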

Step 3: Real-Time Query Execution and Reporting

When a user submits a query, the backend invokes the agents in sequence. Each agent calls the qwen/qwen3-coder-480b-a35b-instruct model served through NVIDIA NIM, a mixture-of-experts model (480B total, 35B active parameters) built for agentic, tool-using tasks. The final synthesized report is returned via the API, formatted for clarity and source transparency.
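For reference, a hedged sketch of how a single agent turn could reach the model. It assumes NVIDIA's hosted NIM endpoint exposes an OpenAI-compatible chat completions route at `https://integrate.api.nvidia.com/v1/chat/completions` with bearer-token auth, and that the key is read from an `NVIDIA_API_KEY` environment variable; the helper names, temperature, and token limit are illustrative choices, not the project's settings. Only the standard library is used, so the request body can be inspected without sending it.

```python
import json
import os
import urllib.request

NIM_URL = "https://integrate.api.nvidia.com/v1/chat/completions"  # assumed endpoint
MODEL = "qwen/qwen3-coder-480b-a35b-instruct"


def build_chat_request(system_prompt: str, user_content: str,
                       temperature: float = 0.2, max_tokens: int = 1024) -> dict:
    """Build an OpenAI-style chat completion body for one agent turn."""
    return {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": system_prompt},  # e.g. the agent's role
            {"role": "user", "content": user_content},
        ],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }


def call_nim(payload: dict, timeout: float = 120.0) -> str:
    """POST the payload and return the reply text (needs network access and an API key)."""
    request = urllib.request.Request(
        NIM_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {os.environ['NVIDIA_API_KEY']}",
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Each agent would differ only in its system prompt; in the project itself, Agno sits between the agents and the model and takes care of this plumbing.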

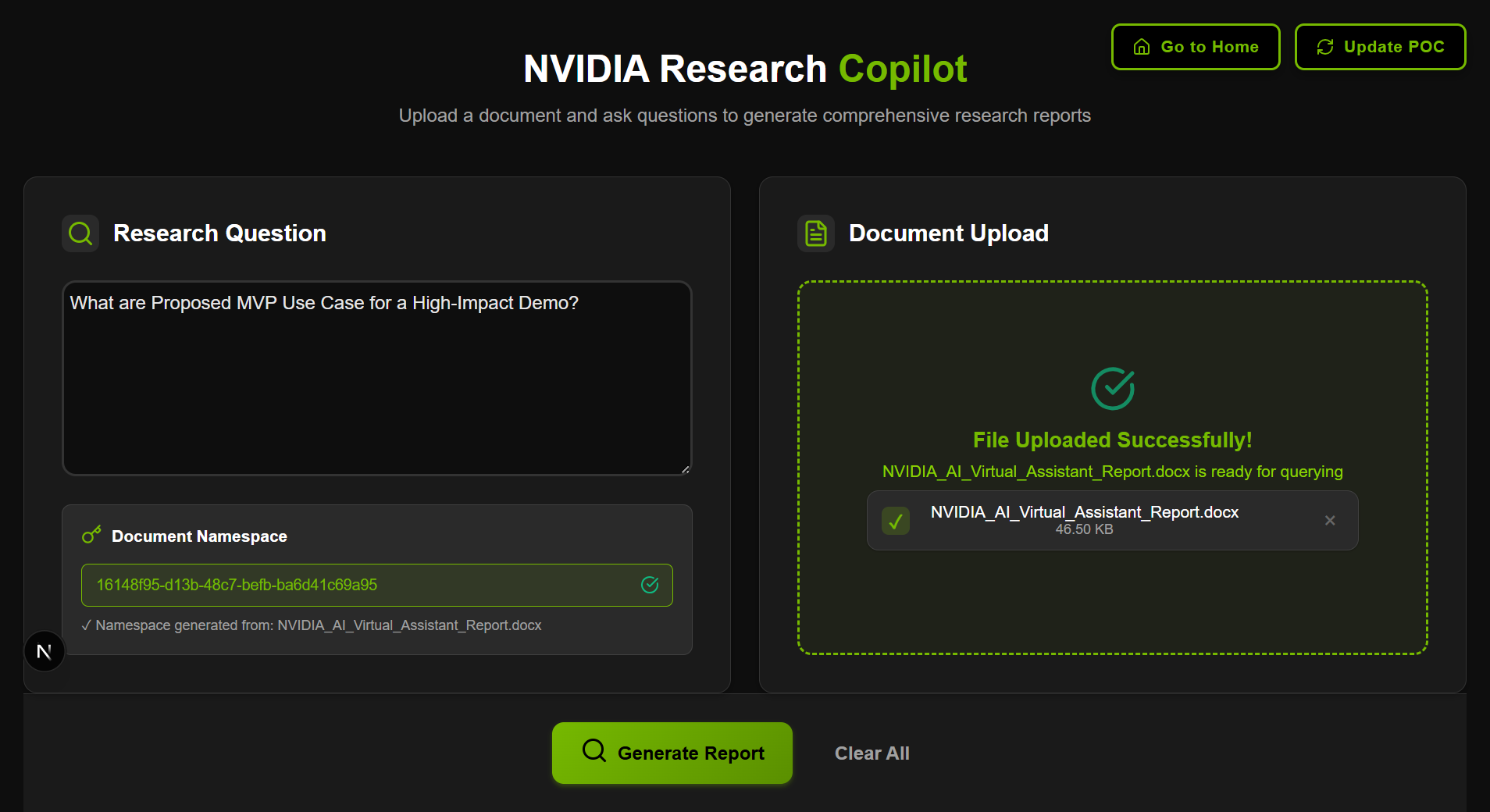

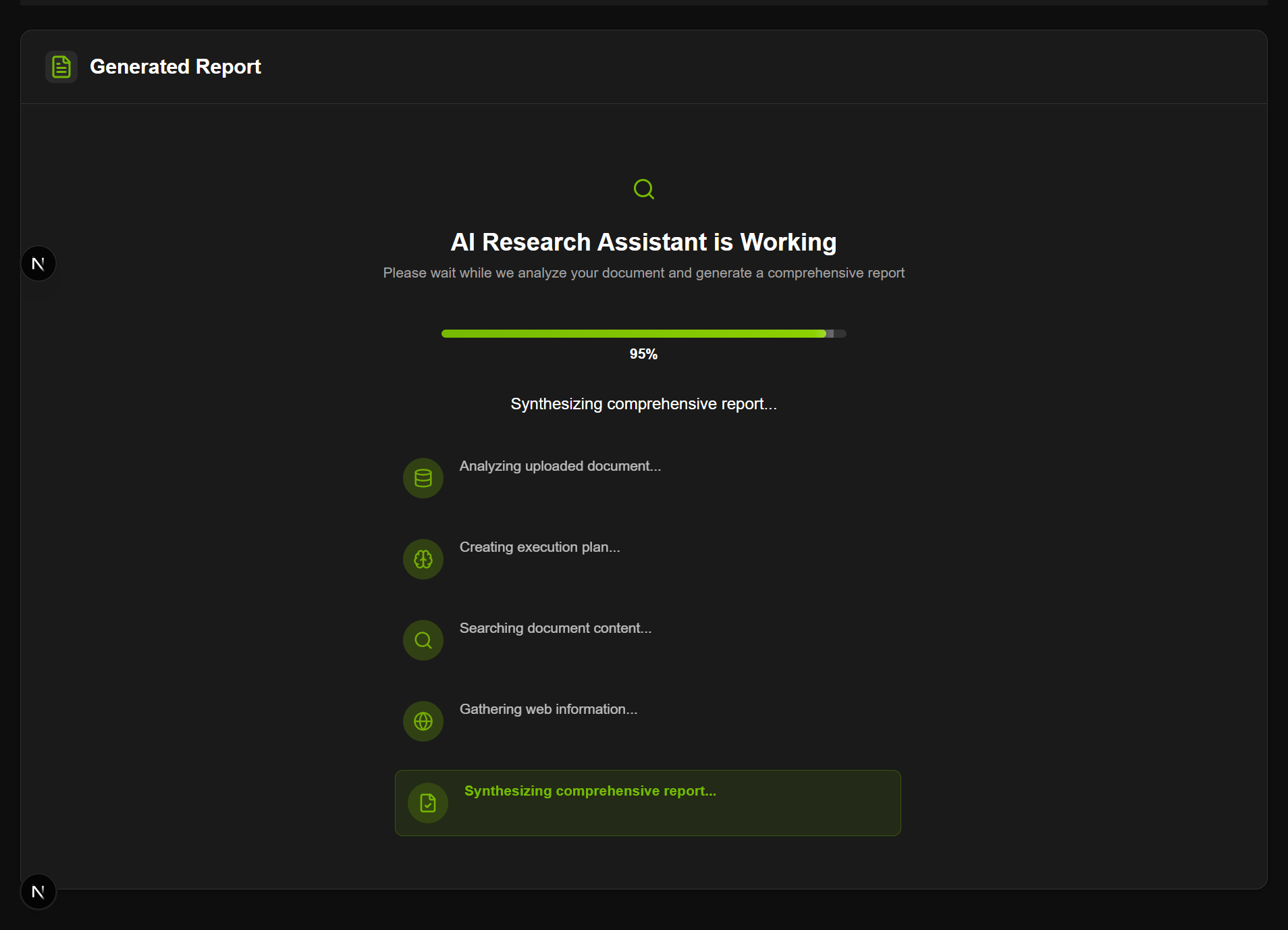

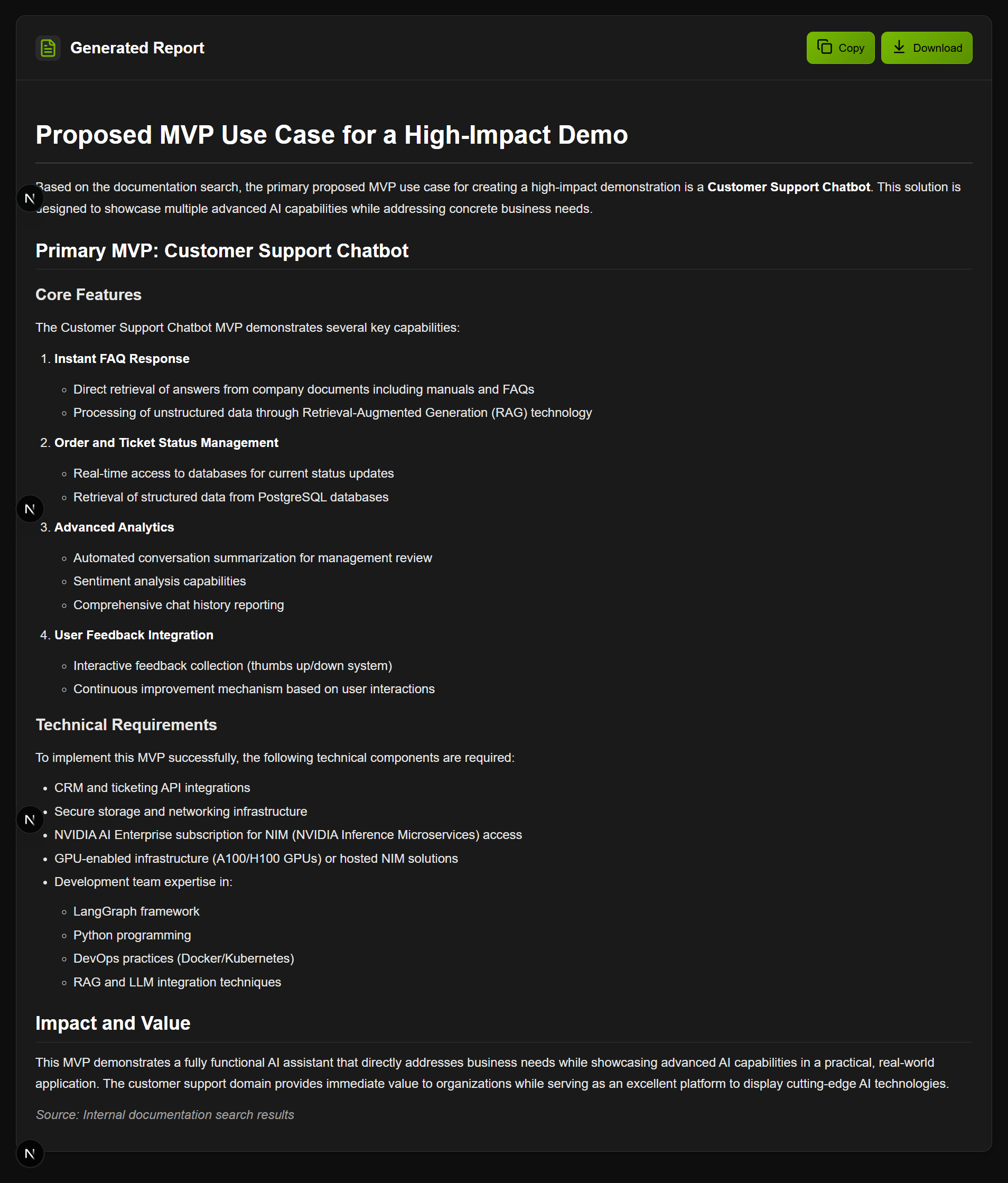

Working Example Screens

User Interface Overview: Upload a document and ask your question directly through a simple, intuitive dashboard.

Real-Time Query Execution: View live progress as the AI agent plans, searches, and compiles answers step by step.

Instant Research Report: Get a structured, ready-to-use report instantly after the agent completes its analysis.

Core Features and Capabilities

- Multi-Format Support: Upload and analyze PDF, DOC, DOCX, or TXT files instantly.

- Multi-Agent Intelligence: Planner, Executor, and Reporter agents collaborate through a Plan–Execute–Report workflow.

- Hybrid Search: Combines deep document understanding (via NVIDIA embeddings + Pinecone) with live web results (via Exa API).

- Real-Time Updates: Watch the agent’s reasoning progress step-by-step on the web dashboard.

- Smart Reporting: Get clean, well-structured Markdown reports ready to read, copy, or download.

- High-Performance Design: Built with FastAPI and React+Vite for scalable, low-latency performance.
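The document does not say how live progress reaches the dashboard. One common option is Server-Sent Events (SSE), sketched here under that assumption: each agent stage emits a small JSON event framed in the `text/event-stream` format (an `event:` line, a `data:` line, and a terminating blank line). The stage names and payload fields are hypothetical. In FastAPI, a generator like this could be returned through `StreamingResponse` with `media_type="text/event-stream"`, and the browser can consume it with `EventSource`.

```python
import json
from typing import Iterator


def sse_event(event: str, data: dict) -> str:
    """Frame one Server-Sent Event: event name, one JSON data line, blank line."""
    return f"event: {event}\ndata: {json.dumps(data)}\n\n"


def progress_stream(question: str) -> Iterator[str]:
    # Hypothetical stages mirroring Plan -> Execute -> Report; a real backend
    # would yield each event only once the corresponding agent has finished.
    yield sse_event("status", {"stage": "plan", "message": f"Planning research for: {question}"})
    yield sse_event("status", {"stage": "execute", "message": "Searching document index and web"})
    yield sse_event("status", {"stage": "report", "message": "Compiling Markdown report"})
    yield sse_event("done", {"stage": "complete"})


if __name__ == "__main__":
    for frame in progress_stream("What drove Q3 growth?"):
        print(frame, end="")
```

SSE is one-directional (server to browser) and runs over plain HTTP, which fits progress reporting; a WebSocket would only be needed if the dashboard also had to send messages mid-run.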

Benefits

- Efficiency: Reduces research time from hours to minutes by automating document review and contextual synthesis.

- Accuracy: Embedding-based search retrieves information by semantic similarity rather than exact keyword matches.

- Privacy: Keeps data isolated by storing embeddings under uniquely generated Pinecone namespaces.

- Explainability: Multi-agent workflow provides interpretable steps in reasoning.

- Scalability: Easily adaptable to enterprise-scale workloads and additional AI tools.

The NVIDIA-Powered Research Agent represents the next evolution of enterprise knowledge automation, combining deep document intelligence with external contextual awareness. By bringing together NVIDIA's AIQ Blueprint, FastAPI, Agno, NVIDIA NIM for LLM inference, NVIDIA embedding models, Exa, and Pinecone, it transforms how teams extract and understand insights from vast data sources.