Fully Local Multilingual Smart Voice Agent on NVIDIA DGX Spark

The landscape of enterprise AI is rapidly advancing, with voice-driven applications at the forefront of digital transformation. Deploying robust, scalable voice agents requires an integrated approach to local inference, orchestration, and model optimization. This article presents a technical overview and deployment guide for a multimodal voice agent architecture, powered by the NVIDIA DGX Spark platform, and optimized for real-time, multilingual, and multi-agent scenarios.

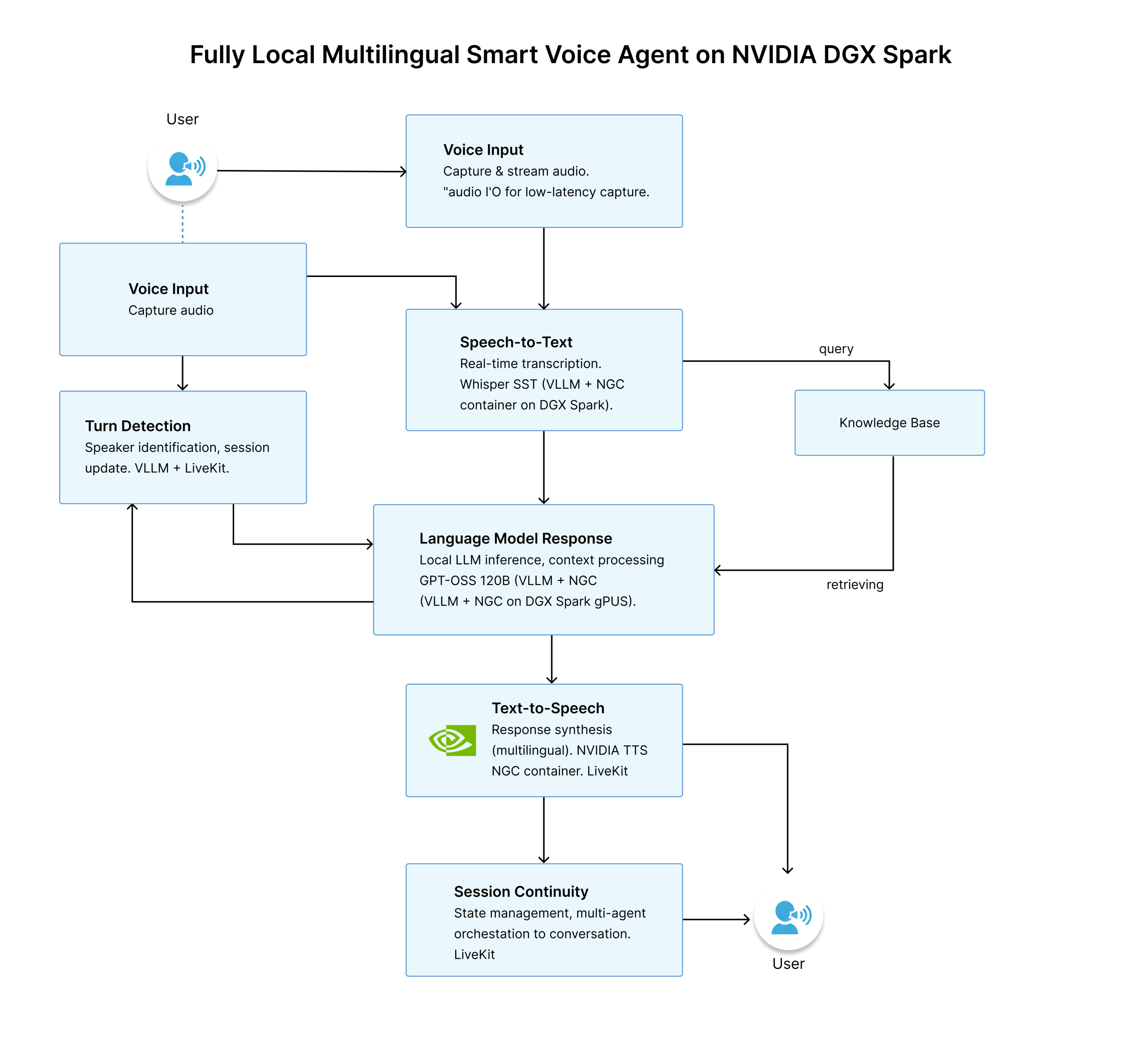

Overview of Multilingual Smart Voice Agent

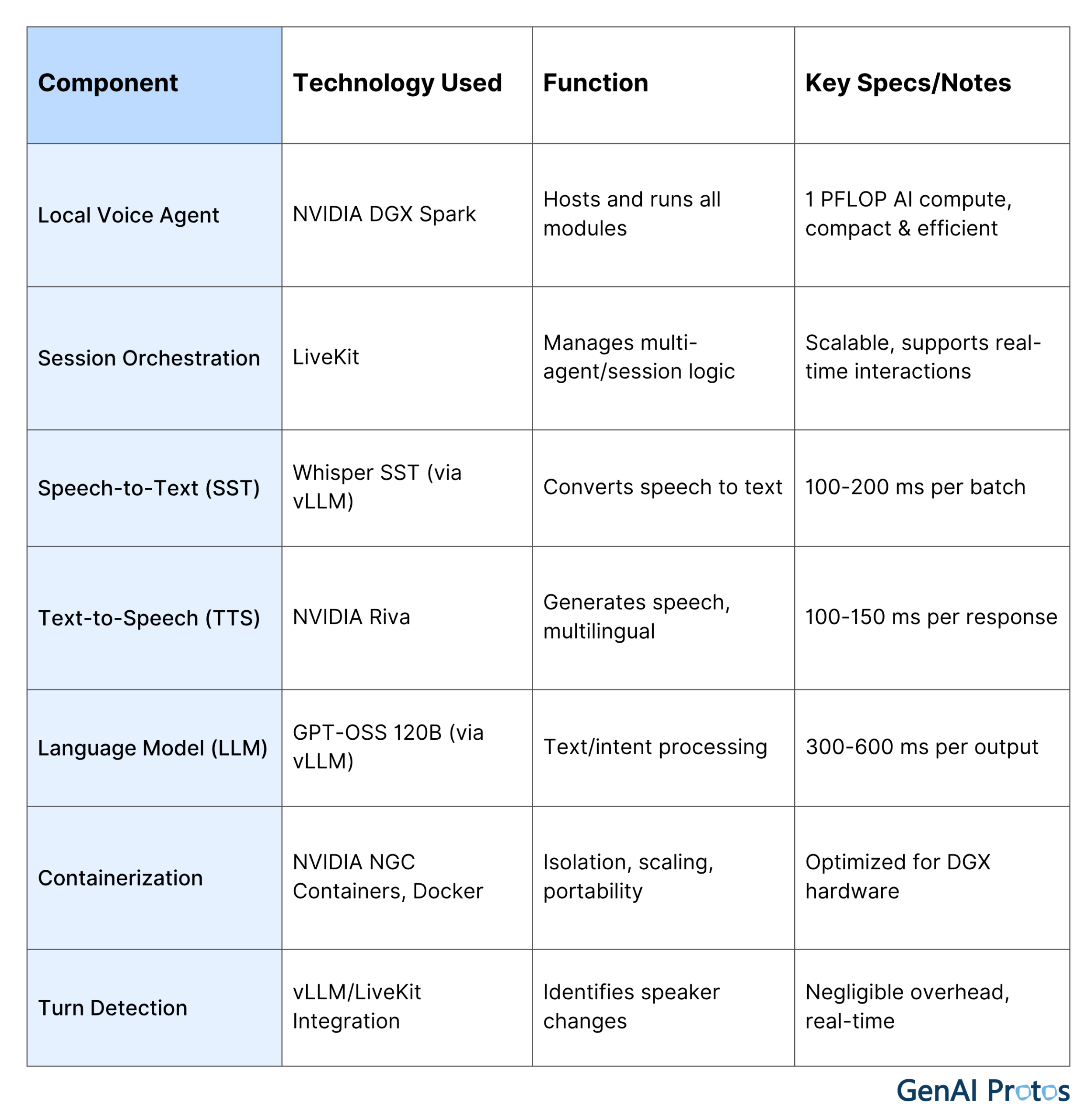

The prototype runs entirely on a single NVIDIA DGX Spark system, with no cloud inference in the loop. It targets real-time voice agent deployment, multilingual interaction, and enterprise speech automation. Key workflow components include:

- Real-time session orchestration using LiveKit.

- Speech recognition through Whisper STT (speech-to-text).

- Multilingual text-to-speech via NVIDIA Riva.

- Large language model inference via GPT-OSS 120B, enabled locally.

- Efficient inference and containerization using vLLM and NVIDIA NGC containers.

- Support for features like automated turn detection and dynamic language output.

Architecture and Technical Components

The deployment is localized on NVIDIA DGX Spark, integrating all essential AI components within a containerized environment:

- vLLM Containerized Inference: Orchestrates inference for Whisper STT, GPT-OSS 120B, and Riva TTS models inside dedicated NGC containers.

- LiveKit Voice Orchestration: Manages real-time audio sessions and multi-agent communication, ensuring seamless handover and turn-taking.

- Auto Turn Detection: Detects when a speaker has finished talking so the agent can respond promptly without interrupting, which keeps latency low and the conversation flowing.

- Multilingual Output: Both the TTS engine and the LLM support multiple languages, so the same deployment can serve users in different languages.
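
As a rough illustration of how these components might be laid out on one host, the sketch below records each service's endpoint and checks that no two services claim the same port. The ports and the split into two vLLM servers are assumptions, not details from the prototype: 7880 and 50051 are the usual defaults for a LiveKit server and a Riva gRPC server, 8000 is vLLM's default, and 8001 is an arbitrary choice for a second vLLM instance. Treating Riva as its own gRPC endpoint is also an assumption of this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceEndpoint:
    """Where one local service listens (all values below are assumptions)."""
    name: str
    scheme: str
    host: str
    port: int
    path: str = ""

    @property
    def url(self) -> str:
        return f"{self.scheme}://{self.host}:{self.port}{self.path}"


# Hypothetical single-host layout; adjust to match your own containers.
STACK = {
    "livekit": ServiceEndpoint("LiveKit server", "ws", "127.0.0.1", 7880),
    "llm": ServiceEndpoint("GPT-OSS 120B via vLLM", "http", "127.0.0.1",
                           8000, "/v1/chat/completions"),
    "stt": ServiceEndpoint("Whisper via vLLM", "http", "127.0.0.1",
                           8001, "/v1/audio/transcriptions"),
    "tts": ServiceEndpoint("Riva TTS", "grpc", "127.0.0.1", 50051),
}


def check_unique_ports(stack):
    """Raise ValueError if two services share the same host and port."""
    seen = {}
    for key, endpoint in stack.items():
        owner = seen.setdefault((endpoint.host, endpoint.port), key)
        if owner != key:
            raise ValueError(
                f"{key} and {owner} both use {endpoint.host}:{endpoint.port}"
            )


check_unique_ports(STACK)
for key, endpoint in STACK.items():
    print(f"{key:8s} {endpoint.url}")
```

Keeping the layout in one place like this makes it easier to catch port clashes before any container is started.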

Deployment Strategy on NVIDIA DGX Spark

To maximize system performance and manage infrastructure complexity:

- All AI models are containerized via NVIDIA NGC containers, running on Docker with hardware acceleration.

- vLLM coordinates inference workloads for speech-to-text, language generation, and speech synthesis.

- LiveKit is configured for advanced session management, enabling multi-agent and conversational continuity.

- Local networking configurations prioritize ultra-low latency and secure communication between containers and orchestration services.
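
A minimal sketch of how the container launches could be scripted follows. It only builds the `docker run` argument lists and prints them; nothing is executed. The image tag placeholder `<tag>`, the model identifiers, the host ports, and the GPU memory fractions are all assumptions for illustration, and the helper `vllm_docker_argv` is hypothetical rather than part of any library.

```python
import shlex

CONTAINER_PORT = 8000  # vLLM's default port for its OpenAI-compatible server


def vllm_docker_argv(image, model, host_port, gpu_mem_fraction):
    """Build a `docker run` argument list for one vLLM server container."""
    if not 0.0 < gpu_mem_fraction <= 1.0:
        raise ValueError("gpu_mem_fraction must be in (0, 1]")
    return [
        "docker", "run", "--rm", "--gpus", "all",
        "-p", f"{host_port}:{CONTAINER_PORT}",
        image,
        "vllm", "serve", model,
        "--port", str(CONTAINER_PORT),
        "--gpu-memory-utilization", str(gpu_mem_fraction),
    ]


# Placeholder image reference: substitute a real NGC tag before use.
IMAGE = "nvcr.io/nvidia/vllm:<tag>"

# (model, host port, share of GPU memory): assumed values, not measured.
SERVICES = [
    ("openai/gpt-oss-120b", 8000, 0.6),
    ("openai/whisper-large-v3", 8001, 0.1),
]

if sum(fraction for _, _, fraction in SERVICES) > 1.0:
    raise ValueError("GPU memory fractions add up to more than 1.0")

for model, port, fraction in SERVICES:
    print(shlex.join(vllm_docker_argv(IMAGE, model, port, fraction)))
```

Because several models share one GPU here, giving each server an explicit memory fraction is one way to keep them from competing for the same pool.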

Hardware Environment and System Dependencies

The DGX Spark platform provides a high-efficiency, small form factor system, delivering enterprise-grade AI compute capabilities:

- CPU: 20-core Arm processor with Armv9 architecture, split between performance and efficiency cores.

- GPU: NVIDIA Blackwell architecture GPU, part of the GB10 Grace Blackwell Superchip, with Tensor and RT cores for accelerated AI workloads.

- Memory: 128 GB unified LPDDR5x for high-throughput performance.

- Storage: 4 TB NVMe SSD, supporting rapid data access and self-encryption.

- Networking & Connectivity: 10 GbE, Wi-Fi 7, Bluetooth 5.4, ConnectX-7 SmartNIC (up to 200 Gbps), USB-C, and HDMI.

- Performance: Up to 1 PFLOP at FP4 precision, supporting models of up to 200B parameters on a single unit (larger models with dual-unit configurations).

- Physical & Power Specs: Compact chassis, 1.2 kg weight, advanced thermal design, and a 240 W power supply.
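
To see why a 120B-parameter model is plausible on 128 GB of unified memory, a back-of-the-envelope estimate helps. The sketch below multiplies parameter count by bits per parameter and compares the result against the memory left after a reserve. The uniform bits-per-parameter figure and the 24 GB reserve (for KV cache, the speech models, and the operating system) are assumptions chosen for illustration, not measurements from the prototype.

```python
def weight_footprint_gb(params_billion: float, bits_per_param: float) -> float:
    """Approximate size of the model weights in decimal gigabytes."""
    total_bits = params_billion * 1e9 * bits_per_param
    return total_bits / 8 / 1e9


def fits_in_memory(params_billion: float, bits_per_param: float,
                   memory_gb: float = 128.0, reserve_gb: float = 24.0) -> bool:
    """True if the weights fit in memory_gb after setting aside reserve_gb.

    reserve_gb is an assumed allowance for KV cache, other models, and the OS.
    """
    usable_gb = memory_gb - reserve_gb
    return weight_footprint_gb(params_billion, bits_per_param) <= usable_gb


for params, bits in [(120, 4), (120, 16), (200, 4)]:
    size = weight_footprint_gb(params, bits)
    verdict = "fits" if fits_in_memory(params, bits) else "does not fit"
    print(f"{params}B params at {bits}-bit: about {size:.0f} GB, {verdict}")
```

Under these assumptions, 4-bit weights are what make a model of this size practical on a single unit; the same model at 16-bit precision would need roughly four times the memory.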

Interaction Workflow and Featured Capabilities

Interaction within this multimodal voice AI pipeline is streamlined for real-time operation:

- Voice Input: Captured and transcribed by Whisper STT via vLLM for immediate language processing.

- Auto Turn Detection: Speaker changes are identified, maintaining a natural dialogue flow.

- LLM Processing: GPT-OSS 120B generates intelligent responses, leveraging GPU acceleration for efficient turnaround.

- Speech Synthesis: NVIDIA Riva TTS converts responses into natural, multilingual speech output.

- LiveKit Orchestration: Maintains session context across turns and supports multi-agent scenarios.
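
The steps above can be sketched as a single per-turn function. This is a simplified illustration, not the prototype's code: the class `VoiceTurnPipeline` and its three callables are hypothetical stand-ins for the Whisper, GPT-OSS, and Riva clients, and the stub stages at the bottom exist only so the example runs without any services.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Message = Dict[str, str]


@dataclass
class VoiceTurnPipeline:
    """Chains speech-to-text, LLM, and text-to-speech for one user turn."""
    transcribe: Callable[[bytes], str]          # audio -> text
    generate: Callable[[List[Message]], str]    # chat history -> reply text
    synthesize: Callable[[str, str], bytes]     # (text, language) -> audio
    language: str = "en-US"
    history: List[Message] = field(default_factory=list)

    def handle_turn(self, audio: bytes) -> bytes:
        text = self.transcribe(audio)
        self.history.append({"role": "user", "content": text})
        # Pass a copy so a stage cannot mutate the stored session context.
        reply = self.generate(list(self.history))
        self.history.append({"role": "assistant", "content": reply})
        return self.synthesize(reply, self.language)


# Stub stages standing in for the real services (assumptions for the demo).
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_llm(messages: List[Message]) -> str:
    return f"You said: {messages[-1]['content']}"


def fake_tts(text: str, language: str) -> bytes:
    return f"[{language}] {text}".encode("utf-8")


pipeline = VoiceTurnPipeline(fake_stt, fake_llm, fake_tts, language="es-US")
print(pipeline.handle_turn(b"hola"))
print(len(pipeline.history), "messages kept as session context")
```

Keeping the history on the pipeline object mirrors the idea that session context persists across turns, while the stages themselves stay stateless and replaceable.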

Performance Benchmarks and Operational Insights

- Latency:

  - Speech-to-text: ~100–200 ms per utterance batch.

  - LLM inference: ~300–600 ms per response.

  - Text-to-speech: ~100–150 ms per utterance.

  - Total end-to-end response: ~600 ms–1 s (near real-time).

- Utilization:

  - GPU utilization exceeds 80% under peak loads for LLM and speech tasks.

  - CPU/memory overhead is moderate due to platform optimizations.

- Scaling:

  - Each DGX Spark can serve as a node for on-prem deployment or hybrid cloud architectures.

  - NGC containers allow repeatable scaling and rapid updates.
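
The latency figures can be cross-checked with simple arithmetic. The sketch below adds up the three per-stage ranges and compares them with the reported end-to-end range; the stage names are labels chosen here, and attributing the remainder to audio transport, turn detection, and orchestration is an assumption rather than something the measurements state.

```python
# Per-stage latency ranges in milliseconds, taken from the figures above.
STAGE_LATENCY_MS = {
    "speech_to_text": (100, 200),
    "llm_inference": (300, 600),
    "text_to_speech": (100, 150),
}
REPORTED_TOTAL_MS = (600, 1000)


def stage_sum(stages):
    """Sum the best-case and worst-case latencies across all stages."""
    best = sum(low for low, _ in stages.values())
    worst = sum(high for _, high in stages.values())
    return best, worst


def unaccounted(stages, total):
    """Latency in the reported total that the listed stages do not explain."""
    best, worst = stage_sum(stages)
    return total[0] - best, total[1] - worst


print("stage sum (ms):", stage_sum(STAGE_LATENCY_MS))
print("unaccounted (ms):", unaccounted(STAGE_LATENCY_MS, REPORTED_TOTAL_MS))
```

The stages sum to 500–950 ms, which leaves roughly 50–100 ms of the reported total for everything outside model inference. LLM inference is the largest single contributor in both the best and worst case.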

Multilingual and Multi-Agent Capabilities

- Multilingual Inference: Both text-to-speech and LLM inference support multiple languages, making the solution globally adaptable.

- Auto Turn Detection: Intelligent turn-taking powered by real-time speaker analysis maintains natural conversation flow, with no perceptible impact on system speed.
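
To make the idea of turn detection concrete, here is a deliberately simple silence-timeout heuristic. It is not the detector used in the prototype and not LiveKit's turn detection; it is a swapped-in, minimal technique that ends a turn once speech has been heard and is followed by a long enough run of quiet frames. The function name, frame length, energy threshold, and silence duration are all assumptions.

```python
from typing import Iterable, Optional


def find_end_of_turn(frame_rms: Iterable[float],
                     frame_ms: int = 20,
                     silence_threshold: float = 0.01,
                     min_silence_ms: int = 500) -> Optional[int]:
    """Return the index of the frame where the turn ends, or None.

    A turn ends when at least one speech frame has been seen and it is
    followed by min_silence_ms of consecutive frames below the threshold.
    """
    frames_needed = -(-min_silence_ms // frame_ms)  # ceiling division
    heard_speech = False
    silent_run = 0
    for index, rms in enumerate(frame_rms):
        if rms >= silence_threshold:
            heard_speech = True
            silent_run = 0
        elif heard_speech:
            silent_run += 1
            if silent_run >= frames_needed:
                return index
    return None


# 100 ms of leading silence, 200 ms of speech, then 600 ms of silence.
frames = [0.0] * 5 + [0.2] * 10 + [0.0] * 30
print(find_end_of_turn(frames))
```

A fixed silence timeout like this trades responsiveness against the risk of cutting off a speaker who pauses mid-sentence, which is one reason production systems tend to use more context-aware detection.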

Final Notes

For enterprise AI leaders and technical practitioners, this architecture provides a blueprint for deploying scalable, efficient, and feature-rich voice agents in on-premises environments. By combining advanced container orchestration, high-performance hardware resources, and state-of-the-art AI models, organizations can achieve superior conversational AI experiences optimized for engagement, responsiveness, and global reach.