Our Solutions

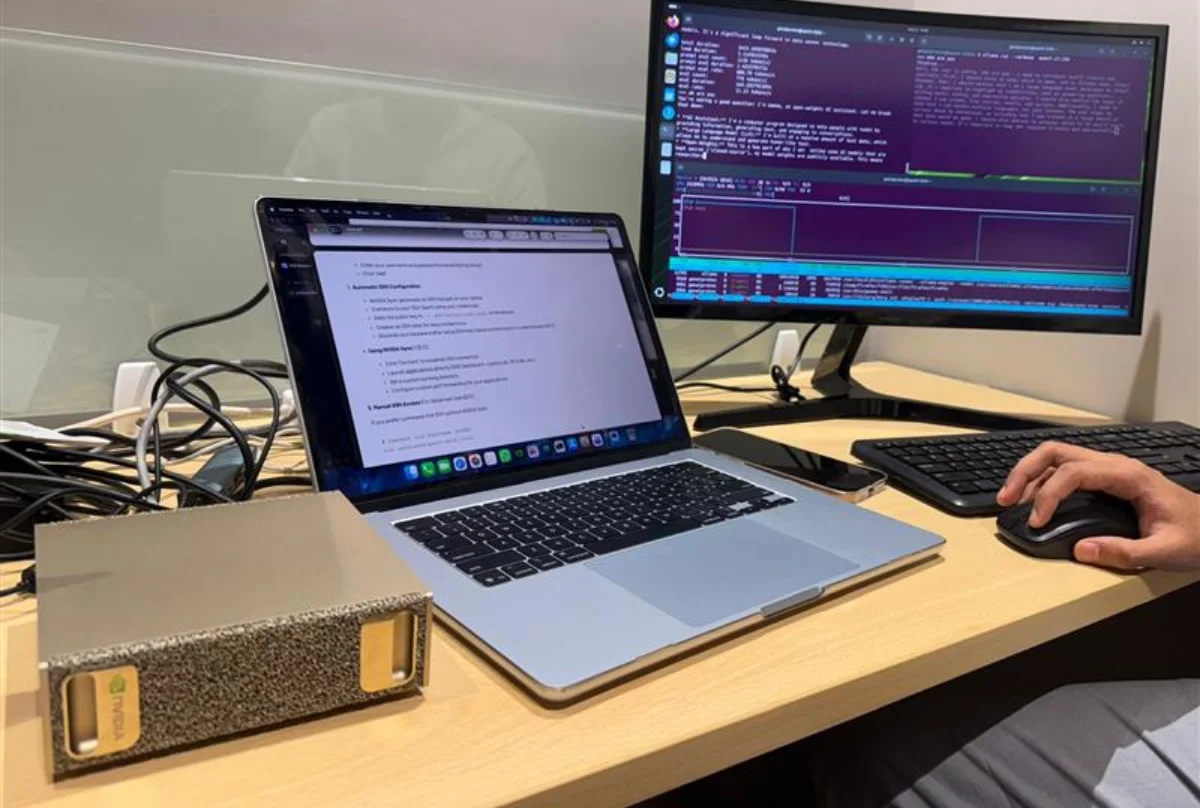

See how we turn powerful AI models into self-contained systems that run anywhere - even offline.

Edge AI Highlights

-

No Cloud Needed

Your data never leaves your network - perfect for regulated industries.

-

Zero Token Cost

Predictable ROI with no per-call API costs.

-

Low Latency

Real-time inference with no network lag.

-

Private LLM's

Deploy RAG and fine-tuned models locally.

-

Offline AI Assistants

Work even without internet.

-

Massive Edge Compute

Powered by Jetson, Thor, or DGX Spark.

How We Build

We design for speed and clarity. Every engagement starts with a proof of value and

ends with a production-ready deployment.

Service Highlights

-

Remote Deployment

100% global delivery - fully remote & secure

-

Strict NDA's

Enterprise-grade confidentiality & IP protection

-

Intuitive UI

Simple dashboards for non-technical teams

-

Cross-Platform

Works with sensors, cameras, and databases

-

End-to-End Support

From device setup to production rollout

-

Fast Turnaround

Prototype in days, MVP in 2 weeks

Why Edge Now

Why the Future Belongs to the Edge

Data Sovereignty & Compliance (HIPAA, GDPR)

Instant Inference & Low Latency

No Cloud or Token Costs

Hardware-Accelerated Performance

Environmentally Efficient

Complete Control & Privacy

Frequently Asked

Questions

AI that runs directly on your device, ensuring full privacy and real-time results.

NVIDIA Jetson Nano, Orin, Thor, and DGX Spark — plus compatible edge servers.

Yes, we optimize open-weight models like Llama, Phi, and Gemma for offline use.

Typically in 3–5 days, with an MVP ready in 2 weeks.

Absolutely — we build user-friendly UIs, APIs, and connectors.