Enterprise Edge AI Development & Real-Time AI Inference Solutions

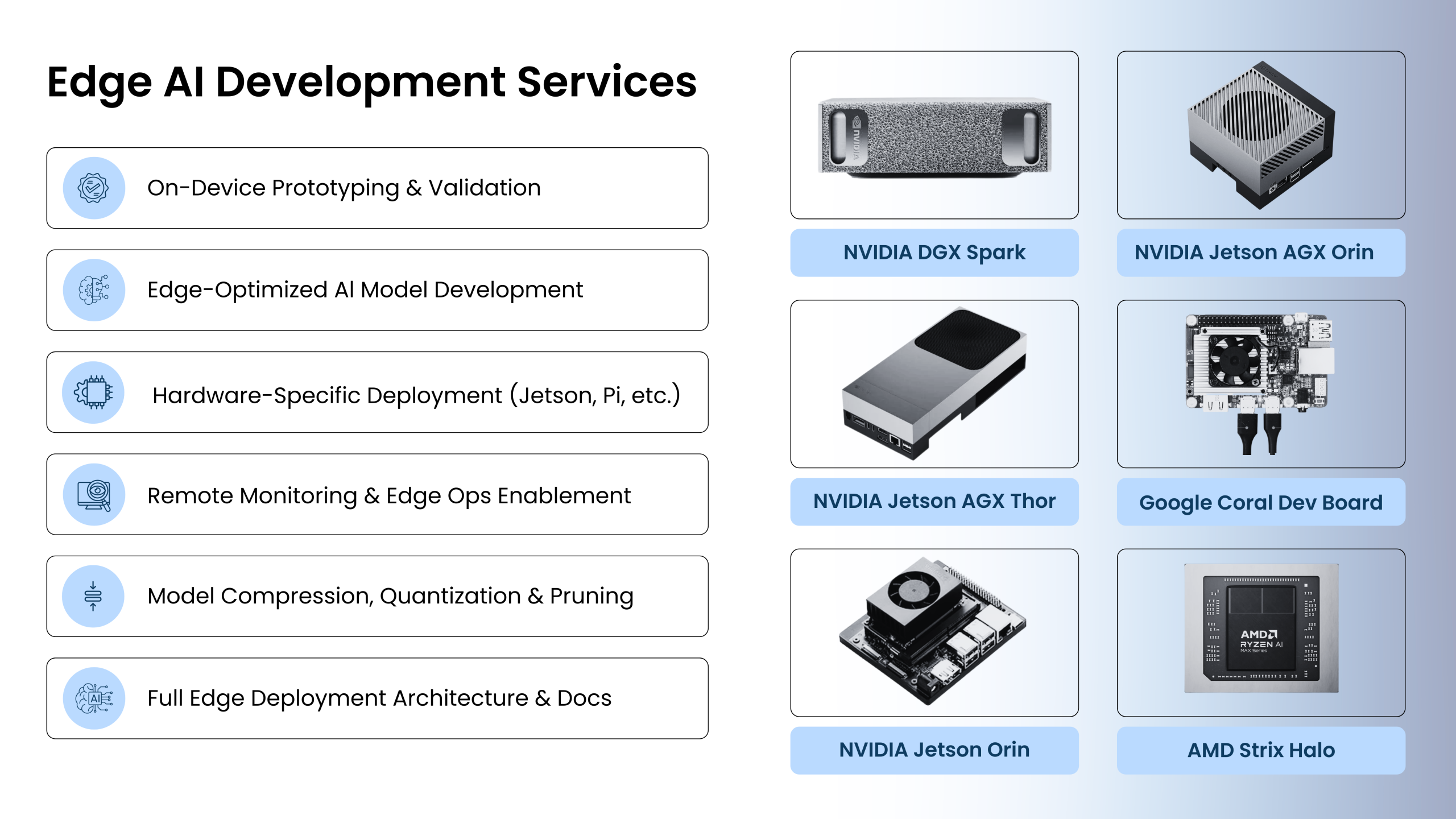

The Edge AI market is projected to reach $66.47 billion by 2030 (21.7% CAGR), driven by demand for real-time processing, enhanced privacy, and autonomous operation. Our Edge AI development services lead this innovation, deploying intelligence across the full hardware spectrum – from TinyML microcontrollers to NVIDIA DGX Spark desktop AI supercomputers.

We develop optimized AI models for on-device execution using advanced quantization, pruning, and knowledge distillation techniques, ensuring high performance on NVIDIA Jetson, Google Coral Edge TPU, Intel Movidius, AMD Kria, and Qualcomm platforms. Our IoT edge AI solutions deliver real-time AI inference with ultra-low latency – critical for autonomous systems, industrial automation, and 5G MEC applications.

Our expertise spans on-device LLMs (up to 200B parameters), generative AI at the edge, TinyML development, and Edge MLOps. We build embedded AI solutions, privacy-preserving AI with federated learning, and hybrid edge-cloud architectures that maximize data security and enable smart, resilient systems at the edge.

Solving Modern Edge Challenges

- Limited Edge AI development and on-device AI deployment expertise

- Edge AI framework and hybrid architecture design challenges

- Privacy-preserving AI and regulatory compliance requirements

- Real-time AI inference needs in offline or remote environments

- Budget constraints for IoT edge AI and 5G MEC projects

- Edge AI hardware selection and optimization complexity

What We Deliver

Strategic Edge AI Architecture & Use Case Design

Define high-impact edge AI applications, hybrid edge-cloud architectures, and 5G MEC deployment strategies for optimal ROI.

Edge AI Application Development (Remote & On-Site)

Custom industrial edge AI, autonomous systems, and IoT edge AI development across NVIDIA DGX Spark, Jetson, Google Coral, and TinyML platforms.

Edge AI Setup & Research Support

Platform selection, proof-of-concept development, and feasibility assessment for NVIDIA, Google, Intel, AMD, and Qualcomm edge AI platforms.

Edge AI Testing & Validation

Performance testing, accuracy validation, and hardware compatibility verification for real-time AI inference across edge devices.
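
As an illustration of what latency testing for real-time inference can look like, here is a minimal sketch in Python. It times repeated calls to an inference function and reports median, 95th-percentile, and worst-case latency. The names `benchmark_latency` and `fake_infer` are hypothetical, and `fake_infer` is only a stand-in for a real model call on the target device.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark_latency(infer: Callable[[], object],
                      warmup: int = 5,
                      runs: int = 50) -> Dict[str, float]:
    """Time repeated calls to `infer` and summarize latency in milliseconds."""
    if runs < 1:
        raise ValueError("runs must be at least 1")

    # Warm-up calls are discarded so one-time setup costs do not skew results.
    for _ in range(warmup):
        infer()

    samples_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        infer()
        samples_ms.append((time.perf_counter() - start) * 1000.0)

    samples_ms.sort()
    # Nearest-rank style index for the 95th percentile, clamped to the last sample.
    p95_index = min(len(samples_ms) - 1, int(0.95 * len(samples_ms)))
    return {
        "p50_ms": statistics.median(samples_ms),
        "p95_ms": samples_ms[p95_index],
        "max_ms": samples_ms[-1],
    }


def fake_infer() -> int:
    # Hypothetical stand-in for a real on-device model invocation.
    return sum(i * i for i in range(1000))


stats = benchmark_latency(fake_infer)
print(stats)
```

In practice the reported percentiles would be compared against the latency budget of the application, measured on the actual edge hardware rather than a development machine.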

Edge AI Integration Services

Seamless integration with IoT infrastructure, industrial systems, 5G MEC networks, and hybrid edge-cloud architectures.

Edge AI Deployment & Maintenance

Production deployment using containerized edge AI, Edge MLOps automation, fleet management, and OTA model updates.

Edge AI Data Pipeline & Storage Engineering

Efficient data pipelines for edge AI with real-time ingestion, preprocessing, edge caching, and privacy-preserving architectures.

Edge AI Training & Enablement

Team training on edge AI development, on-device optimization, TinyML, Edge MLOps, and platform-specific SDKs.

Your Benefits

- Ultra-Low Latency Real-Time AI Inference (<50 ms)

- 60-80% Reduction in Cloud Infrastructure Costs

- Enhanced Data Privacy & Security Through Local Edge AI Processing

- 3-10x Performance Improvement Through Hardware-Aware Optimization

- Hybrid Edge-Cloud Architecture for Cost-Optimized AI Deployment

- Optimized Edge AI Performance & Resource Efficiency

Our Work Portfolio

We bring automation, frameworks, and AI-native thinking to help your team do more with less—and do it better.

Private Edge AI for Legal Data Processing

Deployed on-prem Edge LLMs for confidential document and contract analysis. Ensures complete data privacy and compliance with zero cloud exposure.

Edge NLP for Sensitive Enterprise Data

Implemented on-device NLP pipelines for large-volume proprietary datasets. Delivers fast insights and secure in-house processing with full data sovereignty.

Conversational Database Assistant with Edge LLM

Built a local natural-language interface for relational databases. Enables real-time analytics and chat-based data access without cloud dependency.

On-Device Visual Intelligence with Jetson Orin + Gemini-Vision

Deployed real-time edge analytics to interpret live video streams locally. Enables instant scene understanding, motion tracking, and event summarization.

Conversational Edge Robotics Powered by Jetson Orin NX + Qwen VLM

Implemented an intelligent field-robot assistant that understands visual scenes and answers natural-language questions.

Edge AI for Industrial Safety Compliance

Implemented PPE detection and zone-violation alerts using Jetson Xavier-based cameras, supporting worker safety compliance in hazardous zones and improving safety adherence by 50%.

Healthcare Edge AI for Medical Imaging

Delivered on-device diagnostic inference models for ultrasound and X-ray classification. Ensures patient data never leaves the facility while delivering sub-second diagnostic suggestions.

Edge AI for Retail Shelf Analytics

Deployed AI-powered shelf scanners using Jetson modules to detect out-of-stock items, rack damage, misplaced products, and pricing mismatches, improving planogram compliance by 90%.

Why GenAI Protos?

Unmatched Hardware Platform Expertise

Deep expertise across NVIDIA DGX Spark, Jetson Orin, Google Coral Edge TPU, Intel Movidius, AMD Kria, Qualcomm, Hailo, and ARM platforms. Our platform-agnostic approach ensures optimal hardware selection for your specific requirements, from TinyML microcontrollers to desktop AI supercomputers.

Leadership in Emerging Edge Technologies

Pioneers in on-device LLMs (up to 200B parameters), generative AI at the edge, 5G Multi-Access Edge Computing (MEC), TinyML development, and agentic edge AI. We bring cutting-edge capabilities to your edge deployments.

Mission-Critical Reliability & Field-Tested Performance

Production-grade edge AI with 99.9% uptime in harsh environments. Proven across industrial automation, autonomous vehicles, healthcare devices, smart cities, and critical infrastructure with offline operation and fail-safe architectures.

Complete Edge AI Lifecycle Management

End-to-end ownership from strategy to production. Full-stack capabilities including Edge MLOps, containerized deployment (Kubernetes), hybrid edge-cloud architectures, federated learning, OTA updates, and fleet management at scale.

Advanced Edge AI Optimization & Performance Engineering

Achieve 3-10x performance improvements through AI model quantization (FP4, INT8), pruning, knowledge distillation, and hardware-aware optimization. Specialized tuning for NVIDIA Tensor Cores, Google Edge TPU, Intel VPU, and FPGA acceleration for maximum real-time AI inference performance.
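
To make the INT8 quantization mentioned above concrete, here is a minimal sketch of symmetric per-tensor quantization in plain Python. It is an illustration under simple assumptions, not a description of any specific toolchain: the function names `quantize_int8` and `dequantize` and the sample weights are hypothetical, and production workflows typically rely on a framework's own quantization tooling with calibration data.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8 quantization, so that w is roughly q * scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Map the largest magnitude onto 127; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer and clamp into the symmetric INT8 range.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate floating-point weights from INT8 values."""
    return [q * scale for q in quantized]


# Hypothetical example weights, chosen only for illustration.
weights = [0.4, -1.0, 0.25, 0.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight is stored in one byte instead of four, and the reconstruction error per weight is bounded by half the scale. Whether that error is acceptable depends on the model and must be checked with accuracy validation on representative data.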

Ready to Build, Optimize, and Deploy Edge AI Solutions?

Unlock real-time intelligence and transform your operations with custom Edge AI development services from GenAI Protos. From TinyML sensors and smart IoT devices to autonomous vehicles, industrial robots, and 5G smart city infrastructure, we help you deploy on-device AI that delivers breakthrough performance right where it matters most.

Whether you’re building generative AI at the edge, deploying on-device LLMs for intelligent assistants, implementing real-time AI inference for autonomous systems, or scaling industrial edge AI across factory floors, our expertise across NVIDIA DGX Spark, Jetson, Google Coral Edge TPU, and all major platforms ensures your success.