Overview

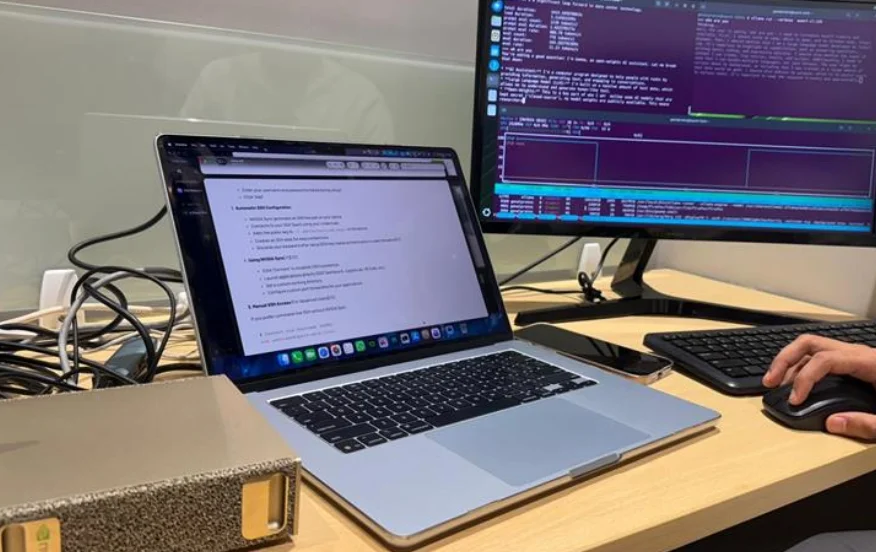

Run powerful large language models directly on NVIDIA Jetson Nano with zero cloud dependency. Our optimized LLM chatbot solution delivers real-time conversational AI with complete data privacy, perfect for secure environments, offline deployments, and cost-sensitive applications.

Technical Specification

Hardware: NVIDIA Jetson Nano (4GB)

Context Window: Up to 4K tokens

Models: Llama 2 7B, Phi-3 Mini, Gemma 2B

Latency: <1 second average response time

Quantization: 4-bit and 8-bit INT formats

Power: <10W typical operation

Explore Solution

Key Highlights

Runs Llama 2, Phi-3, and Gemma models locally

Sub-second response times with optimized inference

Custom RAG integration for domain knowledge

Multi-turn conversations with context management

Zero API costs or token fees

Works completely offline