AI Crawler – Instantly Extract Content from any Website

April 15, 2025


Overview of AI Crawler – a FastAPI-based Web Crawler API

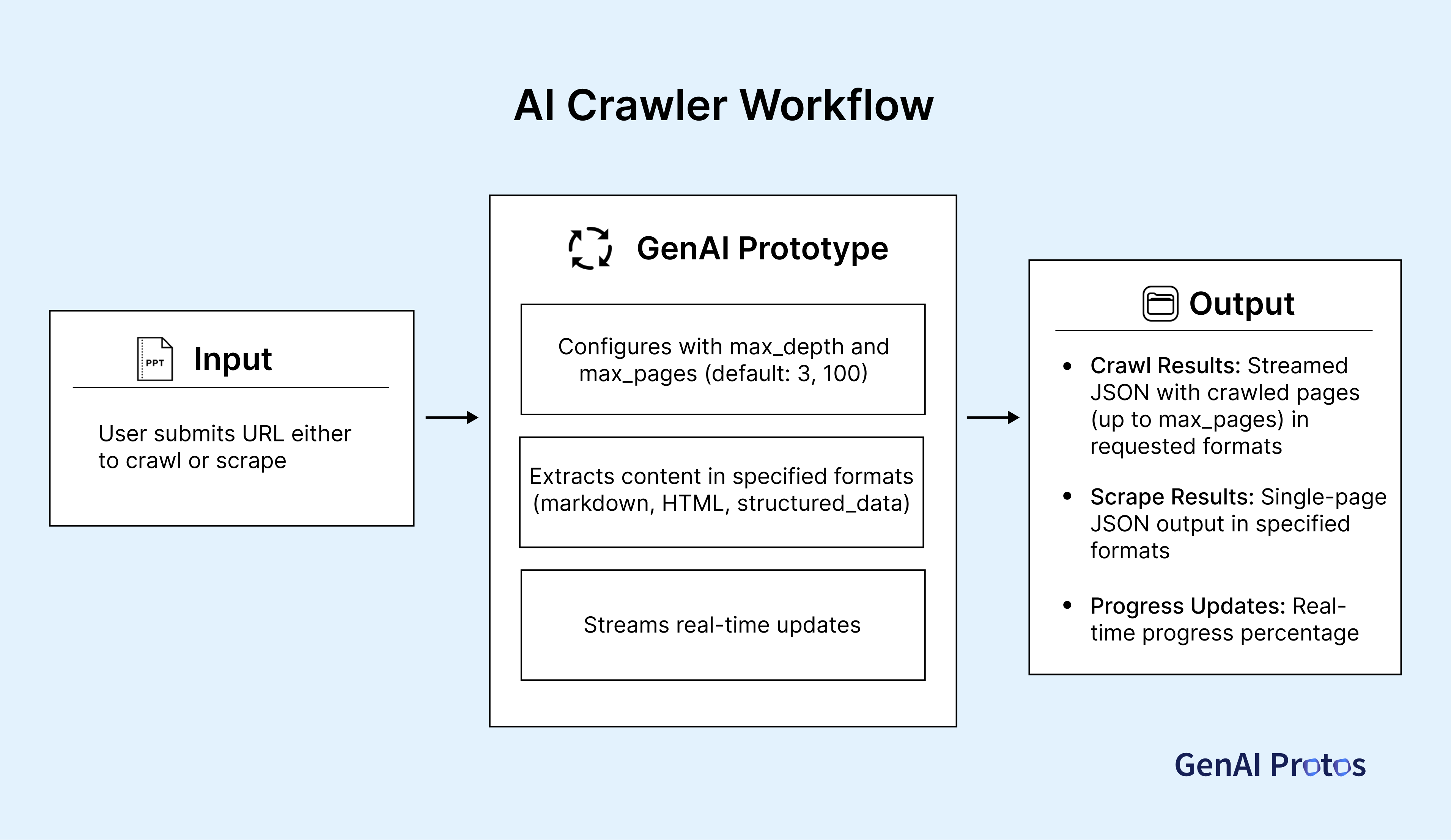

This application, a FastAPI-based Web Crawler API, extracts content from websites and supports both single-page scraping and multi-page crawling. It uses an EnhancedWebTool component to process the fetched pages, streams results in real time, offers several output formats, and includes error handling and logging for reliability. As part of our AI prototyping services at www.genaiprotos.com, we use it to gather and prepare data for Generative AI applications: in our rapid prototyping process, the API lets us quickly collect and analyze data so that we can develop and test AI models efficiently.
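The post doesn't show the streaming code. The following is only a minimal sketch of how progress, results, and per-page errors could be sent as server-sent events (SSE) while failures are logged. `sse_event`, `stream_results`, the event names, and the injected `extract` callable (a stand-in for EnhancedWebTool, whose interface isn't described) are all hypothetical.

```python
import json
import logging
from typing import Callable, Iterable, Iterator

logger = logging.getLogger("crawler")


def sse_event(event: str, data: dict) -> str:
    """Format one server-sent event: an 'event:' line, a 'data:' line, then a blank line."""
    return f"event: {event}\ndata: {json.dumps(data)}\n\n"


def stream_results(urls: Iterable[str], extract: Callable[[str], str]) -> Iterator[str]:
    """Yield a progress event per URL, then a result or error event, then a final 'done' event."""
    url_list = list(urls)
    succeeded = 0
    for index, url in enumerate(url_list, start=1):
        yield sse_event("progress", {"url": url, "index": index, "total": len(url_list)})
        try:
            content = extract(url)  # hypothetical extraction step
        except Exception as exc:  # report the failure but keep the stream alive
            logger.exception("extraction failed for %s", url)
            yield sse_event("error", {"url": url, "message": str(exc)})
            continue
        succeeded += 1
        yield sse_event("result", {"url": url, "content": content})
    yield sse_event("done", {"succeeded": succeeded, "failed": len(url_list) - succeeded})
```

In a FastAPI app, a generator like this could be wrapped in `fastapi.responses.StreamingResponse(..., media_type="text/event-stream")`. Because `json.dumps` escapes newlines, each payload stays on a single `data:` line, which keeps the SSE framing intact.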

Features of AI Web Crawler

- Web Crawling: Recursively crawls a website up to a specified depth and page limit, systematically following links to capture linked pages. This supports the comprehensive data collection on which robust machine learning models in our AI research and development projects are trained.

- Single-Page Scraping: Extracts content from a single webpage for targeted data retrieval. This is particularly useful early in proof-of-concept (POC) development for new Generative AI use cases, when specific data points are needed to validate an idea.

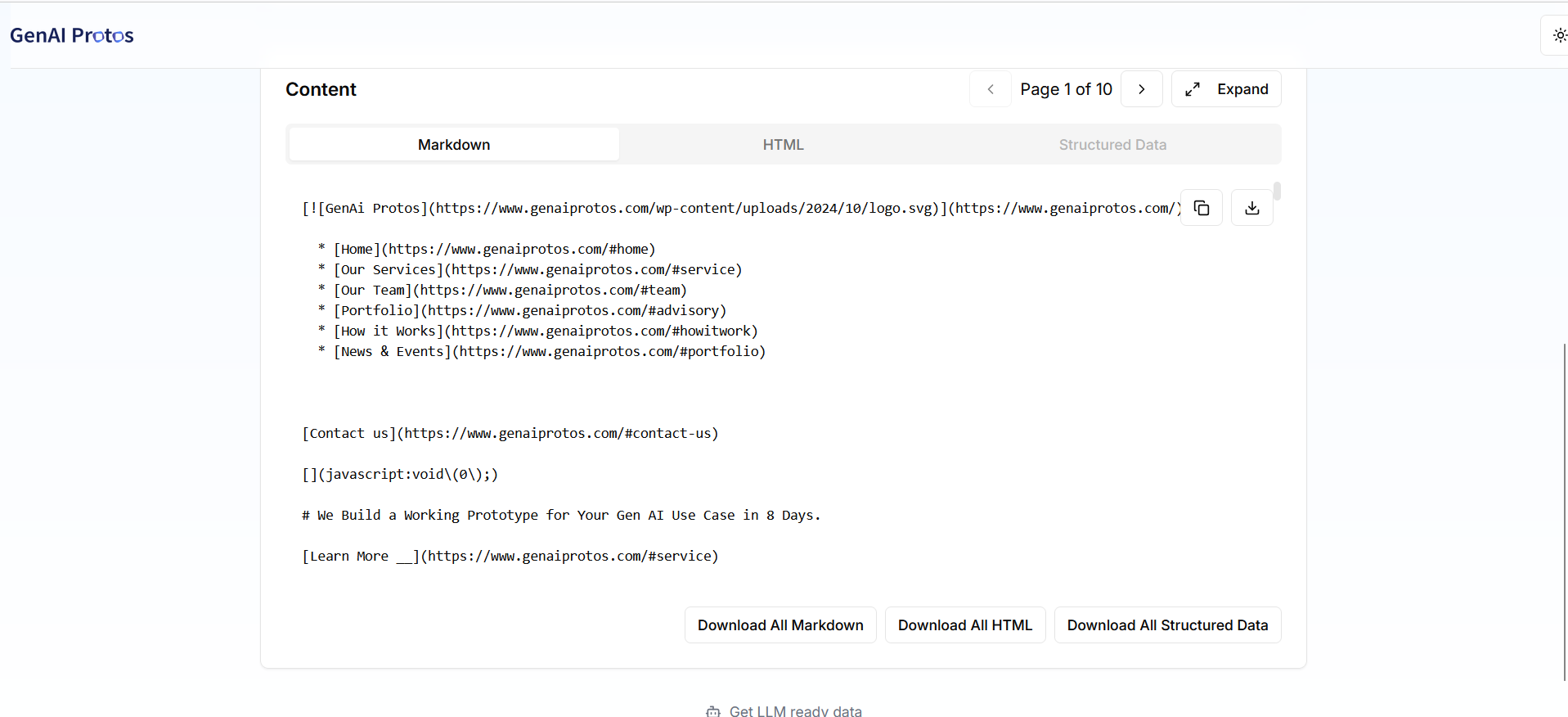

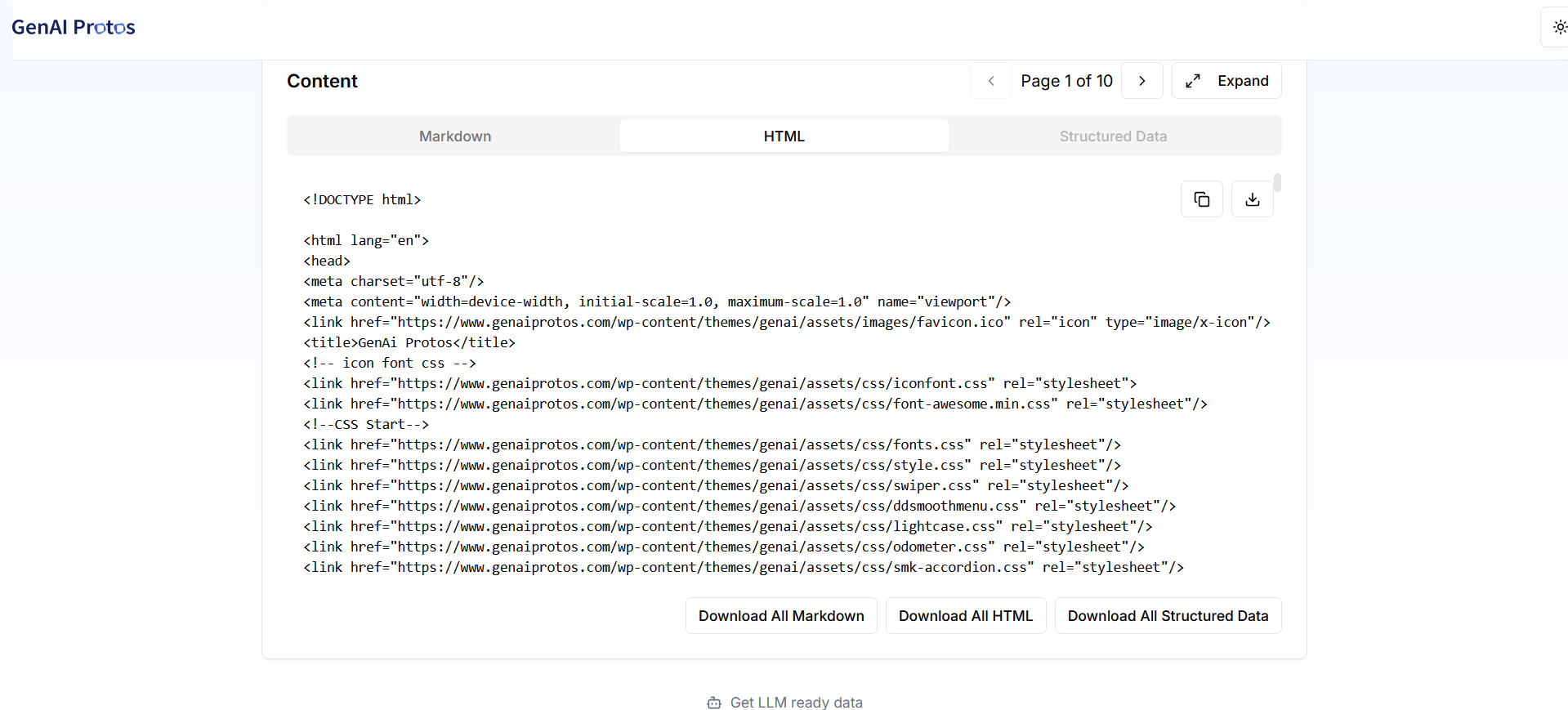
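As a rough illustration of depth- and page-limited crawling, here is a breadth-first sketch that uses only the standard library. It assumes the crawler stays on the start URL's domain and treats the start page as depth 0. `crawl`, `extract_links`, and the injected `fetch` callable (the single-page scrape step) are hypothetical names, not the API's actual code.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Dict, List
from urllib.parse import urldefrag, urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def extract_links(page_url: str, html: str) -> List[str]:
    """Resolve relative links against page_url and drop #fragments."""
    collector = LinkCollector()
    collector.feed(html)
    return [urldefrag(urljoin(page_url, href))[0] for href in collector.hrefs]


def crawl(start_url: str, fetch: Callable[[str], str],
          max_depth: int = 1, max_pages: int = 10) -> Dict[str, str]:
    """Breadth-first crawl from start_url (depth 0), same domain only.

    Stops following links at max_depth and stops fetching after max_pages pages.
    Returns {url: html} in the order the pages were fetched.
    """
    domain = urlparse(start_url).netloc
    pages: Dict[str, str] = {}
    seen = {start_url}
    queue = deque([(start_url, 0)])
    while queue and len(pages) < max_pages:
        url, depth = queue.popleft()
        html = fetch(url)  # the single-page scrape step
        pages[url] = html
        if depth >= max_depth:  # don't expand links beyond the depth limit
            continue
        for link in extract_links(url, html):
            if urlparse(link).netloc == domain and link not in seen:
                seen.add(link)
                queue.append((link, depth + 1))
    return pages
```

Injecting `fetch` keeps the traversal testable offline; a real fetcher might call `urllib.request.urlopen(url, timeout=10).read().decode()` and should also respect robots.txt. Breadth-first order means that when `max_pages` cuts a crawl short, the pages closest to the start URL are the ones kept.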

- Format Flexibility: Supports multiple output formats (e.g., Markdown, HTML, structured data) to suit different use cases. This makes it straightforward to feed the output into data engineering pipelines and AI development tools, which speeds up our AI implementation work.

- Real-Time Streaming: Delivers progress updates and results live via server-sent events (SSE), so users see each page as it is processed instead of waiting for the whole job to finish. In time-sensitive AI applications, such as real-time data analysis or monitoring, this keeps our clients informed and lets them act on the latest information.

- Error Management: Provides comprehensive logging and error reporting for debugging and reliability. Because data quality is critical in AI development, this helps preserve the integrity of the data used in our models, so our Generative AI solutions are built on a solid foundation.
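The post doesn't document how the output formats are produced. As a sketch under that caveat, the standard-library `HTMLParser` subclass below converts a small subset of HTML (headings h1 to h3, paragraphs, list items, links) to Markdown and also collects a hypothetical "structured" view made of headings and links. `MarkdownCollector`, `convert`, and the format identifiers are illustrative assumptions, not the API's real schema.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional, Union

HEADING_PREFIX = {"h1": "# ", "h2": "## ", "h3": "### "}


class MarkdownCollector(HTMLParser):
    """Turn h1-h3, p, li and a tags into Markdown lines; record headings and links."""

    def __init__(self) -> None:
        super().__init__()
        self.lines: List[str] = []
        self.headings: List[str] = []
        self.links: List[Dict[str, str]] = []
        self._buf: List[str] = []         # text of the current block element
        self._prefix = ""                 # Markdown prefix for the current block
        self._href: Optional[str] = None  # set while inside an <a href=...> tag
        self._link_text: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_PREFIX:
            self._prefix = HEADING_PREFIX[tag]
        elif tag == "li":
            self._prefix = "- "
        elif tag == "a":
            self._href = dict(attrs).get("href")
            self._link_text = []

    def handle_data(self, data):
        if self._href is not None:
            self._link_text.append(data)
        else:
            self._buf.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = "".join(self._link_text).strip()
            self.links.append({"text": text, "href": self._href})
            self._buf.append(f"[{text}]({self._href})")
            self._href = None
        elif tag in HEADING_PREFIX or tag in ("p", "li"):
            text = "".join(self._buf).strip()
            if text:
                self.lines.append(self._prefix + text)
                if tag in HEADING_PREFIX:
                    self.headings.append(text)
            self._buf, self._prefix = [], ""


def convert(html: str, fmt: str = "markdown") -> Union[str, Dict[str, list]]:
    """Return the page as raw HTML, Markdown, or a dict of headings and links."""
    if fmt == "html":
        return html
    parser = MarkdownCollector()
    parser.feed(html)
    parser.close()
    if fmt == "markdown":
        return "\n\n".join(parser.lines)
    if fmt == "structured":
        return {"headings": parser.headings, "links": parser.links}
    raise ValueError(f"unsupported format: {fmt!r}")
```

A production converter would also need to skip `script` and `style` content and handle nested lists, tables, and inline emphasis; a dedicated HTML-to-Markdown library is usually a better fit than hand-rolled parsing.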

Configure with your own LLMs:

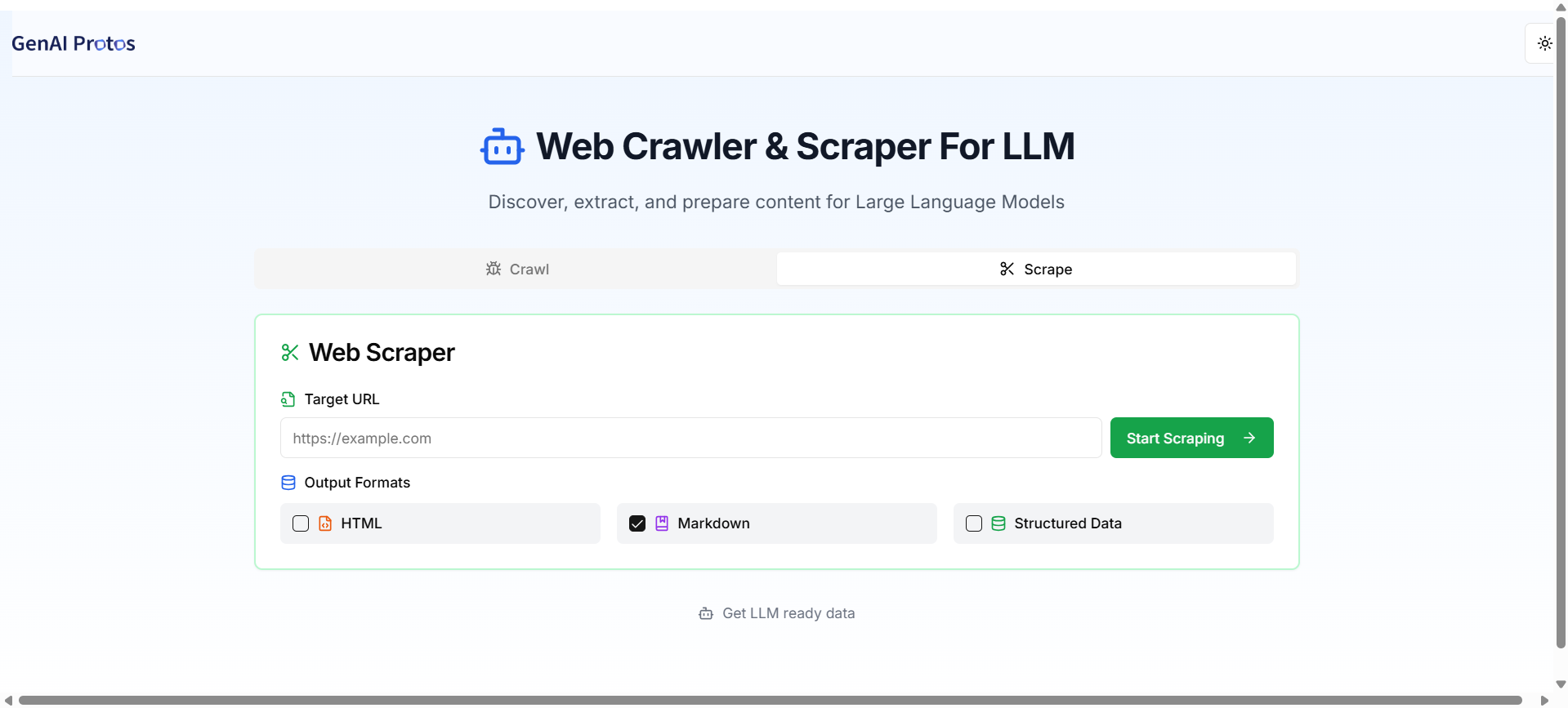

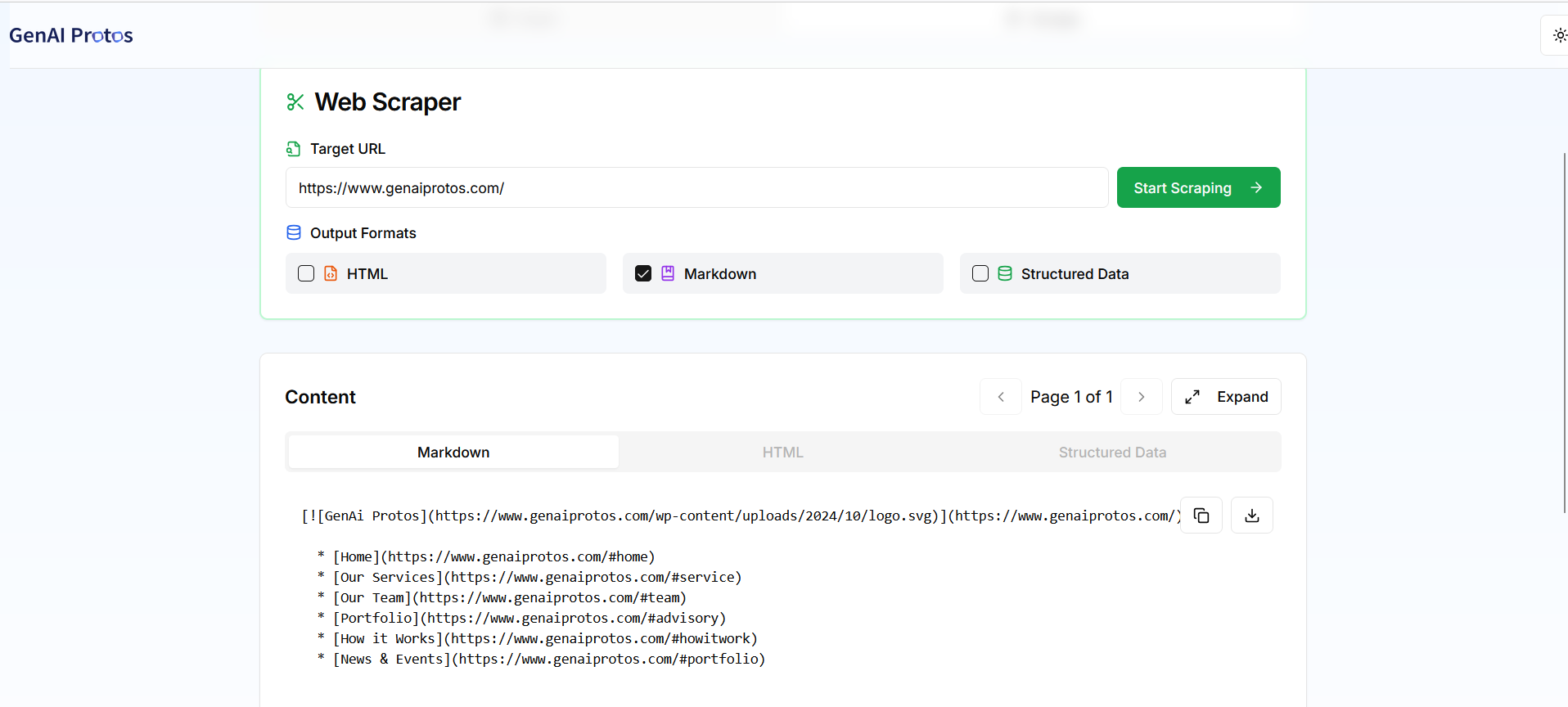

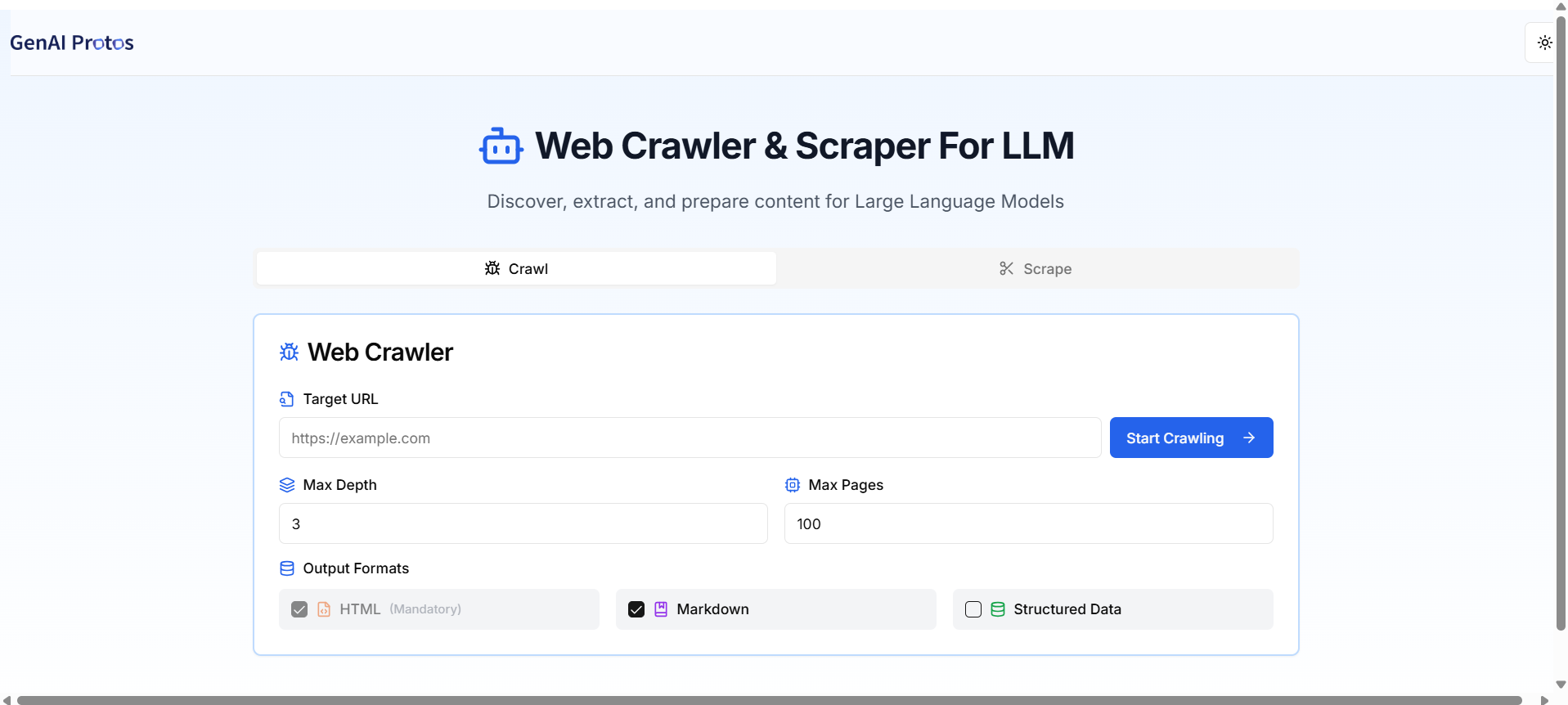

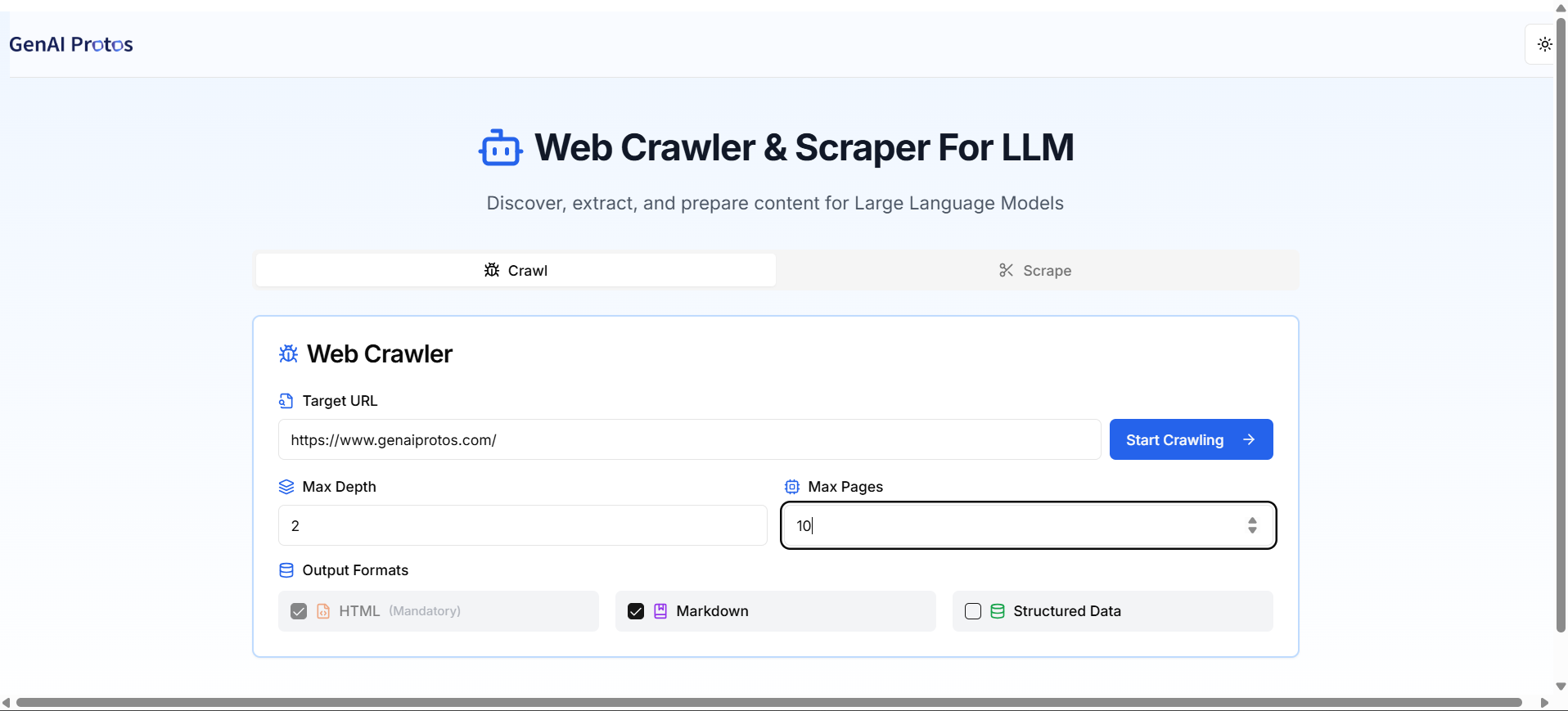

Select Crawl/Scrape Mode

Crawling process: enter the URL and the maximum depth to crawl

Output in HTML, Markdown, Structured or Selected format

Scraper: Enter the URL and select the output format
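To make the two modes concrete, this hypothetical request object validates the inputs shown in the screenshots: a URL, a mode, an output format, and, for crawls, a depth and page limit. `ExtractRequest`, its field names, and the allowed values are assumptions for illustration, not the API's documented schema.

```python
from dataclasses import dataclass
from urllib.parse import urlparse

MODES = {"scrape", "crawl"}
FORMATS = {"html", "markdown", "structured"}  # assumed format identifiers


@dataclass
class ExtractRequest:
    """Hypothetical request body for a combined crawl/scrape endpoint."""
    url: str
    mode: str = "scrape"
    output_format: str = "markdown"
    max_depth: int = 0   # used only in crawl mode
    max_pages: int = 1   # used only in crawl mode

    def __post_init__(self) -> None:
        parsed = urlparse(self.url)
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            raise ValueError(f"expected an http(s) URL, got {self.url!r}")
        if self.mode not in MODES:
            raise ValueError(f"mode must be one of {sorted(MODES)}")
        if self.output_format not in FORMATS:
            raise ValueError(f"output_format must be one of {sorted(FORMATS)}")
        if self.mode == "scrape":
            # A scrape covers exactly one page, so any crawl limits are ignored.
            self.max_depth, self.max_pages = 0, 1
        elif self.max_depth < 0 or self.max_pages < 1:
            raise ValueError("crawl needs max_depth >= 0 and max_pages >= 1")
```

In FastAPI itself, the idiomatic equivalent is a Pydantic `BaseModel` request body with field constraints, where invalid input is rejected with a 422 response before the handler runs.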